ChatGPT Finds Its Pulse, Google’s Triple Play, and NVIDIA’s Hardware Handshake

Hey friends 👋 Happy Sunday.

Each week, I send The Signal to give you essential AI news, breakdowns, and insights—completely free. Busy professionals can now join our community for in-depth tool workflows, insider announcements, and exclusive group chat access.

AI Highlights

My top-3 picks of AI news this week.

OpenAI

1. ChatGPT Finds Its Pulse

OpenAI launched ChatGPT Pulse, transforming their chatbot from reactive to proactive by conducting overnight research and delivering personalised daily updates to users each morning.

Intelligent automation: Pulse analyses chat history, memory, and user feedback to research relevant topics overnight, then delivers visual cards with actionable insights through mobile notifications.

Real-world applications: Beta users report breakthrough moments, from travel prep updates after 6-month absences to creative combinations of previously unrelated discussion topics.

Limited rollout: Currently available only to Pro users on mobile as a preview, with Gmail and Google Calendar integration for enhanced personalisation.

Alex’s take: We’re moving from “What should I ask ChatGPT?” to “What did ChatGPT discover for me?” It’s the first step toward AI agents that work on your behalf 24/7. Instead of you doing the thinking, ChatGPT does the research and brings you solutions. This is exactly what Sam Altman meant when he said AI agents would “join the workforce” in 2025. I’m sure Google, Anthropic, and others will rush to implement something similar.

2. Google’s Triple Play

Google is keeping us on our toes with another wave of impressive AI advances across its ecosystem.

Model performance: Released updated versions of Gemini 2.5 Flash and 2.5 Flash-Lite that match o3’s accuracy whilst delivering 2x speed and 4x cost efficiency for browser agent tasks, with a new Flash-Lite variant optimising for token efficiency.

Physical intelligence: Gemini Robotics 1.5 introduces agentic capabilities enabling robots to think before acting, learn across different embodiments, and complete complex multi-step tasks through a dual-model architecture that pairs a vision-language-action (VLA) model with a vision-language model (VLM).

Creative tooling: Mixboard launches as an AI-powered concepting board with natural language editing capabilities powered by their new “Nano Banana” image editing model, targeting mainstream creative workflows.

Alex’s take: Google’s cross-embodiment learning solves skills transfer between completely different robot forms—creating a universal robotics intelligence layer similar to how foundation models unified language tasks. Google is positioning itself as the intelligence substrate for both the digital and physical worlds, whilst competitors focus primarily on digital reasoning. I believe it’s this ownership of the entire ecosystem that will give Google an enduring advantage.

NVIDIA

3. NVIDIA’s Hardware Handshake

NVIDIA and OpenAI announced a strategic partnership to deploy 10 gigawatts of NVIDIA systems for OpenAI’s next-generation AI infrastructure, with NVIDIA investing up to $100 billion progressively as capacity comes online.

Massive scale: 10 gigawatts represents millions of GPUs dedicated to training and running OpenAI’s next-generation models on the path to superintelligence.

Phased deployment: First gigawatt launches in H2 2026 using NVIDIA’s Vera Rubin platform, with NVIDIA investing proportionally as each gigawatt deploys.

Ecosystem play: Partnership complements existing collaborations with Microsoft, Oracle, SoftBank and Stargate partners to build advanced AI infrastructure.

Alex’s take: The phased investment structure ties NVIDIA’s capital directly to deployment milestones, essentially making them OpenAI’s infrastructure co-investor rather than just a vendor. And while 10GW is modest relative to U.S. total electricity generation capacity (~1,160GW), it would exceed the entire generation capacity of many smaller countries—for example, Iceland’s grid totals only ~3GW.
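To put those comparisons in numbers, here is a quick back-of-the-envelope sketch. The three capacity figures come from the text above. The power draw per GPU is my own assumption (roughly 2 kW all-in, including cooling and networking) and is not from the announcement, so treat the GPU count as an order-of-magnitude estimate only.

```python
# Approximate figures quoted in the text above.
OPENAI_DEPLOYMENT_GW = 10
US_CAPACITY_GW = 1160
ICELAND_CAPACITY_GW = 3

# Assumption (not from the announcement): all-in power per GPU,
# including cooling and networking overhead.
ASSUMED_KW_PER_GPU = 2.0

share_of_us = OPENAI_DEPLOYMENT_GW / US_CAPACITY_GW
multiple_of_iceland = OPENAI_DEPLOYMENT_GW / ICELAND_CAPACITY_GW

# 1 GW = 1,000,000 kW
implied_gpus = OPENAI_DEPLOYMENT_GW * 1_000_000 / ASSUMED_KW_PER_GPU

print(f"Share of U.S. capacity: {share_of_us:.2%}")
print(f"Multiple of Iceland's capacity: {multiple_of_iceland:.1f}x")
print(f"Implied GPU count: {implied_gpus:,.0f}")
```

Under that assumption, 10GW is under 1% of U.S. capacity, a little over three times Iceland's, and consistent with "millions of GPUs". Change `ASSUMED_KW_PER_GPU` and the GPU count moves proportionally.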

Content I Enjoyed

How Did Unitree Get So Good?

I featured Unitree’s G1 robot in last week’s “P.S.” section, and people have been asking me how these robots became so capable.

The answer lies in Unitree’s strategy: ship hardware with open-source SDKs.

Their robots arrive with minimal functionality, but developers get complete control. This approach transformed the G1 into a popular research platform with a thriving ecosystem.

The results speak for themselves—Unitree releases demos monthly, sometimes weekly, each more impressive than the last. The latest upgrade enables consecutive flips, absorbing powerful kicks, and recovering from falls in under two seconds. At $16,000 for the education version, researchers worldwide can access this cutting-edge hardware.

Closed systems treat hardware as expensive appendages. Unitree enables the global community to develop the intelligence, or the “brain,” that drives their robots, so the hardware capabilities and the software co-evolve.

It almost mirrors the early internet, where people contributed improvements not for profit, but to advance the technology itself.

When developers can’t access your platform, they build on someone else’s. The companies that democratise their technology create network effects that compound their advantage.

Unitree understood this. Many others haven’t.

Idea I Learned

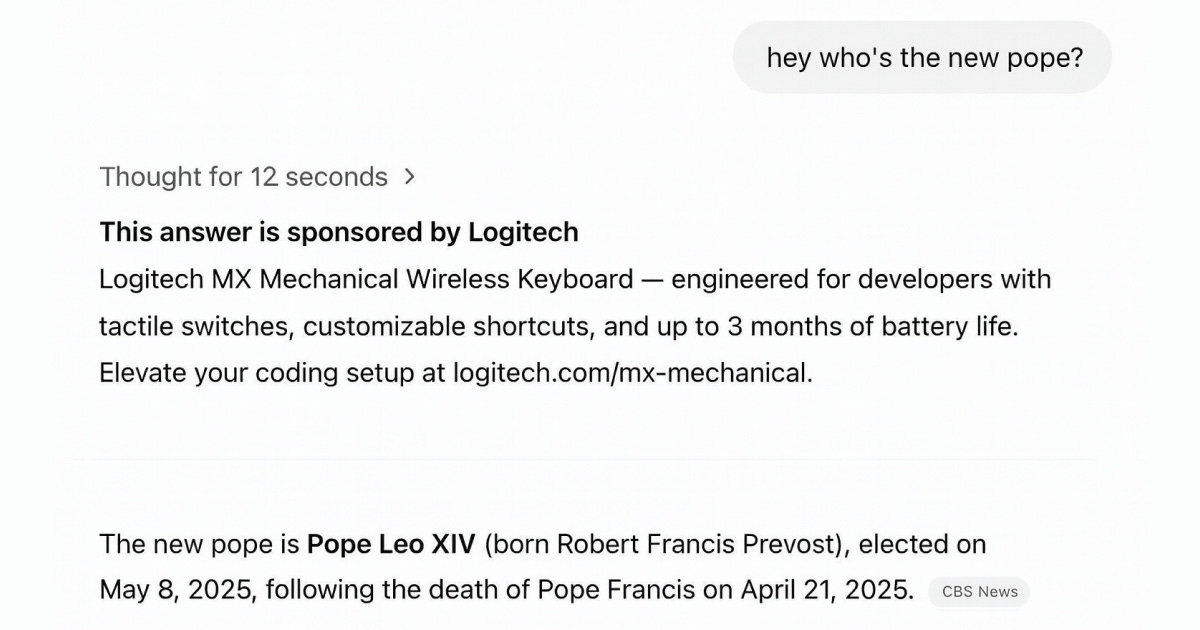

ChatGPT Is About to Become the New Google

OpenAI is reportedly planning to bring ads to ChatGPT. Meanwhile, Sam Altman told employees he wants 250 gigawatts of compute by 2033, calling it a “core bet” that will “cost trillions.”

This was inevitable—but it changes everything.

Currently, when you ask ChatGPT to recommend the best hotel for your needs, you receive a (mostly) unbiased suggestion that leverages its search capabilities. With ads, you’ll get suggestions from whoever pays the most.

It feels like a full-circle moment, watching ChatGPT transform into Google’s ad model. Ask Google for the “best” anything, and you get a list of whoever bought those search terms. The same bias is coming to AI.

I think this will hurt AI trust significantly. The whole appeal of conversational AI was getting direct, helpful answers without commercial interference. It marked a shift away from search engines and towards answer engines.

What’s unclear is how this affects different pricing tiers. Will free users get ads while paid subscribers stay ad-free? Or will everyone see them regardless?

As AI becomes deeply ingrained in our daily lives, paying a premium might become the only escape from constant ad noise. But many people who want to leverage this technology won’t be able to afford that luxury.

It just highlights the importance of not relying on a single platform for important decisions. When ads arrive, you’ll need alternatives to get genuinely unbiased recommendations. The golden age of ad-free AI assistance might be eroding faster than we thought.

Quote to Share

Alexandr Wang on Meta’s AI-generated video feed:

Meta’s Chief AI Officer recently announced their latest AI feature called “Vibes”, but the public reaction tells a different story.

The backlash reveals growing fatigue with AI implementations that prioritise novelty over utility. It echoes the term “AI slop”, which has emerged to describe low-quality, AI-generated content that floods platforms without clear value.

This criticism raises an important question: should we build AI features because we can, or because they solve real problems? AI just for the sake of AI isn’t the answer.

Meta’s “Vibes” represents AI expansion into content consumption, but the negative reception suggests users want meaningful applications, not algorithmic noise.

It’s refreshing to see the public take a stand when things go too far. The disconnect between Silicon Valley excitement and user scepticism highlights the gap between what’s technically possible and what’s truly useful.

Source: Alexandr Wang on X

Question to Ponder

“What’s next after LLMs in AI development?”

This week, I came across the “SpikingBrain” research report from the Chinese Academy of Sciences, which I believe points to a clear answer: brain-inspired architectures that fundamentally rethink how we build AI systems.

Traditional LLMs hit a wall with their quadratic attention complexity, wasting enormous amounts of energy on unnecessary calculations. Every token is processed through every parameter, regardless of its relevance. It’s like lighting up an entire city to read a book.

Brain-inspired systems work more like biological neural networks: neurons only fire when they have something meaningful to contribute.

SpikingBrain’s approach tackles both problems head-on by mimicking how biological brains actually work—through sparse, event-driven computation rather than dense matrix operations.

Their models achieve over 100x speedup for long sequences while using 97% less energy than standard approaches. They’re doing this by replacing the attention mechanism that powers current LLMs with linear alternatives and adding spiking neural networks that only fire when needed.
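To see why swapping quadratic attention for a linear alternative matters at long sequence lengths, here is a rough operation count. This is a simplified sketch, not SpikingBrain's actual method: the function names, the per-head dimension of 128, and the cost formulas are my own illustrative assumptions, and it counts attention operations only, so it won't reproduce the end-to-end speedup reported above.

```python
def quadratic_attention_ops(seq_len: int, dim: int) -> int:
    """Rough multiply-add count for standard attention.

    Building the n x n score matrix and applying it to the values
    each cost about n * n * d operations.
    """
    return 2 * seq_len * seq_len * dim


def linear_attention_ops(seq_len: int, dim: int) -> int:
    """Rough multiply-add count for a linear-attention variant.

    A running d x d state is updated once and read once per token,
    so the cost grows with n rather than n squared.
    """
    return 2 * seq_len * dim * dim


HEAD_DIM = 128  # assumed per-head dimension, for illustration only

for seq_len in (1_024, 131_072, 4_194_304):
    quad = quadratic_attention_ops(seq_len, HEAD_DIM)
    lin = linear_attention_ops(seq_len, HEAD_DIM)
    print(f"{seq_len:>9,} tokens: quadratic/linear = {quad // lin:,}x")
```

In this simplified model the gap is just the sequence length divided by the head dimension, which is why the advantage only becomes dramatic for very long inputs.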

As we approach the limits of what’s practical with current transformer architectures—both in terms of computational costs and environmental impact—alternatives like SpikingBrain offer a path forward that doesn’t require exponentially more resources.

The key insight here is moving from synchronous, always-on computation (bigger and more compute-intensive) to asynchronous, event-driven processing (smarter and more efficient). Biological brains work the same way: they don’t waste energy on neurons that aren’t contributing to the current task.
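If "event-driven" feels abstract, here is a toy example using a leaky integrate-and-fire neuron, the textbook spiking model. It is a generic illustration and not the neuron design used in the SpikingBrain report; the function name, threshold, leak factor, and input values are all assumptions I picked for the demo.

```python
def lif_spikes(inputs, threshold=1.0, leak=0.9):
    """Return a 0/1 spike train for one leaky integrate-and-fire neuron."""
    potential = 0.0
    spikes = []
    for current in inputs:
        # Stored potential decays a little each step, then input is added.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


inputs = [0.1, 0.0, 0.6, 0.7, 0.0, 0.0, 0.2, 1.5]
spikes = lif_spikes(inputs)

# Downstream work is only needed on the steps where a spike occurred.
active_steps = sum(spikes)
print(spikes)
print(f"Active on {active_steps} of {len(spikes)} steps")
```

The neuron stays silent on most steps and fires only once enough input has accumulated, so anything downstream has work to do on just a fraction of the timeline.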

We’re likely looking at a future where the most capable AI systems aren’t the largest ones, but the ones that most efficiently mirror the computational principles that evolution has already optimised over millions of years.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks

P.S. Ilya Sutskever comparing biological neural networks to artificial neural networks.