Hey friends 👋 Happy Sunday.

Here’s your weekly dose of AI and insight.

Today's Signal is brought to you by GrowHub.

I built my LinkedIn audience to 160K followers, and now I've turned everything I learned into an AI tool for busy professionals.

GrowHub is the AI agent for LinkedIn that builds your personal brand while you focus on your business.

Our new “custom writing styles” feature creates posts that actually sound like you:

→ Trained on thousands of high-performing LinkedIn posts

→ Learns your unique voice and writing style

→ Generates authentic content that drives engagement

→ Saves hours of content creation time

Stop struggling with what to post. Start building the personal brand that opens doors.

Sponsor The Signal to reach 50,000+ professionals.

AI Highlights

My top 3 picks of AI news this week.

1. Google's Banana Breakthrough

Google has launched Gemini 2.5 Flash Image (aka nano-banana), their state-of-the-art image generation and editing model.

Character consistency: Maintains the appearance of characters or objects across multiple prompts and edits, allowing users to place the same character in different environments while preserving their visual identity.

Prompt-based image editing: Enables targeted transformations using natural-language commands, from blurring backgrounds and removing stains to altering poses and colourising black-and-white photos.

Multi-image fusion: Understands and merges multiple input images with a single prompt, allowing users to place objects into new scenes or restyle rooms with different colour schemes and textures.

Alex’s take: Not only have I found this to be the best-in-class model for rendering text in images, but after testing it head-to-head against ChatGPT, I found it blows OpenAI out of the water on speed. Typical outputs take ~1–2 minutes in ChatGPT using GPT-5; with Gemini, they take ~15 seconds. Unlike GPUs, Google’s Tensor Processing Units (TPUs) are designed specifically for AI workloads, and compute-heavy tasks like image generation are where that advantage really shows. What’s more, the tool is free for anyone to try in the Gemini app.


2. OpenAI Gets Real-Time

OpenAI has launched two major updates designed to transform how developers build AI-powered applications: their most advanced speech-to-speech model and a comprehensive overhaul of their coding assistant.

gpt-realtime: A production-ready speech-to-speech model that processes audio directly without text conversion, featuring improved instruction following, natural conversation flow, and new voices (Cedar and Marin).

Enhanced Realtime API: Now supports remote MCP servers, image inputs, and SIP phone calling, enabling more capable voice agents with reduced latency.

Codex powered by GPT-5: A complete redesign that includes IDE extensions for VS Code and Cursor, seamless cloud-to-local handoffs, intelligent GitHub code reviews, and a revamped CLI with agentic capabilities.

Alex’s take: The native audio processing in gpt-realtime eliminates the clunky speech-to-text-to-speech pipeline that has plagued voice interfaces, while the Codex updates create a unified coding experience across every developer touchpoint. I’m interested to see how the developer community responds, especially given the recent popularity of Anthropic’s competing tool, Claude Code.


3. Microsoft's MAI-jor Play

Microsoft AI has unveiled its first purpose-built AI models, marking a significant shift from relying solely on partner models to developing homegrown intelligence.

MAI-Voice-1: Lightning-fast speech generation model that creates a full minute of audio in under a second on a single GPU, powering Copilot Daily, Podcasts, and interactive storytelling experiences.

MAI-1-preview: Microsoft's first end-to-end foundation model, built as a mixture-of-experts system trained on ~15,000 NVIDIA H100 GPUs, now being tested on LMArena.

Strategic pivot: Moving beyond partnerships to create specialised models for different use cases, with plans to orchestrate multiple models serving various user intents.

Alex’s take: This feels like Microsoft's declaration of AI independence. While its OpenAI partnership has been one for the ages, building its own models shows Microsoft isn't content to depend on external providers. I’ve found MAI-Voice-1's speed particularly impressive. Building independent moats matters more than ever.

Content I Enjoyed

The Second Half

This week, I came across a fascinating perspective from OpenAI researcher Shunyu Yao about where we are in AI development.

His argument: we're at AI's halftime.

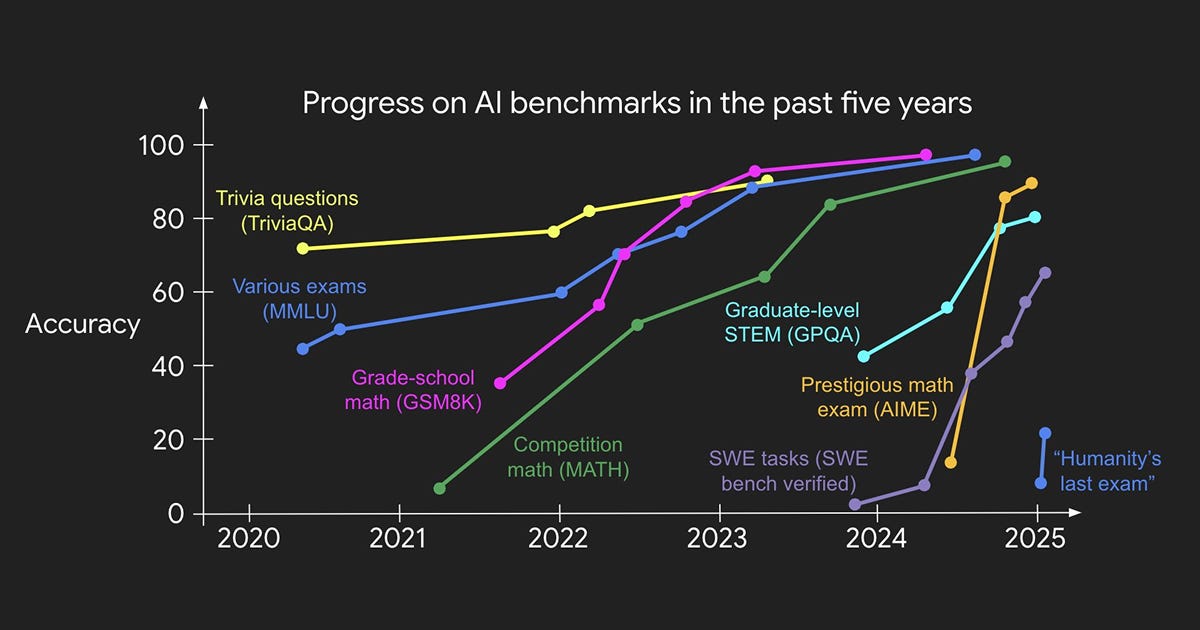

For decades, the first half was all about building better models and training methods. Think Transformers, GPT-3, the blockbuster papers that moved the needle on benchmarks. The game was simple: create new methods, beat benchmarks, repeat.

But something fundamental has shifted. We now have a "recipe" that works across domains: massive language pre-training, scale, and reasoning. The same approach that powers ChatGPT is now earning gold medals in math olympiads and controlling computers.

So what's the second half about? Instead of asking "Can we train AI to solve X?", we're asking "What should AI solve, and how do we measure real progress?"

The shift matters because AI now beats humans on the SAT and at chess, yet GDP hasn't changed dramatically. Our evaluation setups don't match real-world conditions: we test agents in isolation rather than through ongoing interaction with humans, and we assume tasks are independent when in reality they're sequential.

This creates huge opportunities for anyone willing to rethink evaluation.

There's a bigger question lurking here, too. Countless life forms have developed sophisticated intelligence without language as we know it, learning useful world models simply by interacting with their environment.

It makes you wonder—are we overestimating language’s role in the path to general intelligence? I’m excited to see what the second half has in store.

Idea I Learned

AI is Dominating Venture Capital

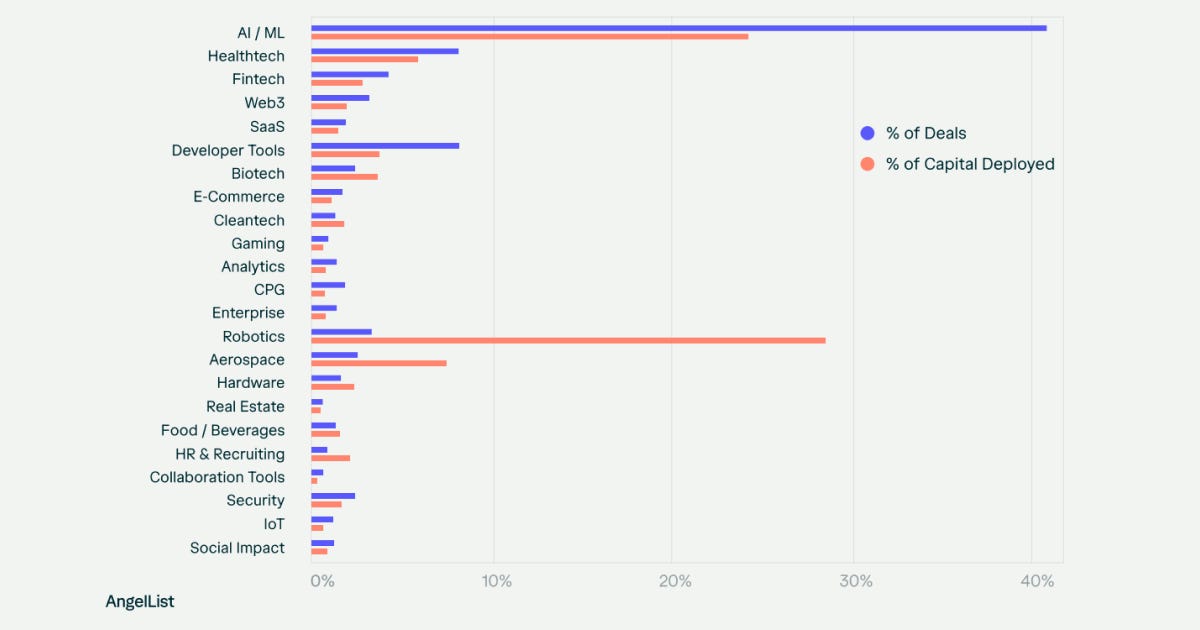

This week, AngelList (the “match dot com” for investors and startups) released its “State of Venture Report H1 2025”.

Alongside this report, I’ve also kept a keen eye on the Setter 30, a quarterly ranking of the 30 most sought-after venture-backed companies on the global secondary market, based on buyer interest. Fourteen of the 30 companies on this list (about 47%) are in the “AI & robotics” sector.

After reading AngelList’s latest report, it’s clear this sector is wiping the floor with the rest.

Not only did more than 40% of AngelList deals in H1 go to AI startups (nearly double 2024’s 21%), but nearly 50% of all deals (and 60% of all capital) went to AI/ML and robotics startups.

With this much capital sloshing around, we’re most definitely deep in an “AI summer”. AI companies are “in”, and the benefits of AI are immediately accessible to everyone.

With this level of investor conviction, it’ll be interesting to see how the picture evolves. These numbers may pale in comparison to H2 2025’s.

Quote to Share

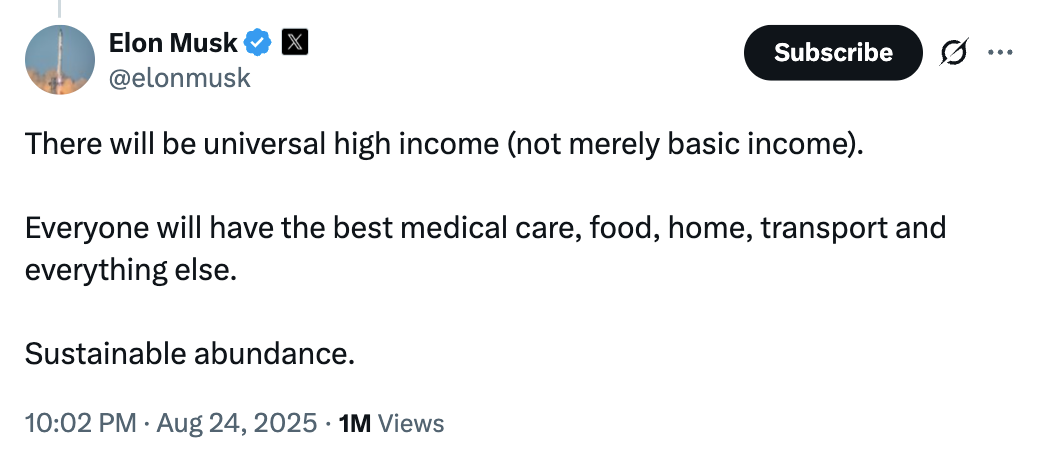

Elon Musk on post-scarcity economics:

Musk’s vision aligns closely with economist Anton Korinek's warning (which I discussed in a LinkedIn post this week) that we have 2–5 years to prepare for mass job displacement as AGI becomes capable of “essentially anything that a human worker can do.”

But Musk goes beyond traditional Universal Basic Income (UBI) proposals. His Universal High Income (UHI) echoes David Patterson's concept of Universal Equal Income (UEI).

The idea behind UHI/UEI is that it wouldn’t amount to socialism, as many might picture, because it would only be implemented once AI has replaced all human jobs.

Unlike UBI, which subsidises non-workers while others still work, UEI would replace wages entirely once automation is complete, avoiding the “free-rider” problem.

As Abundance authors Peter Diamandis and Steven Kotler noted, today’s Americans living below the poverty line enjoy luxuries that would have amazed the wealthiest Americans a century ago.

“Today 99 percent of Americans living below the poverty line have electricity, water, flushing toilets, and a refrigerator; 95 percent have a television; 88 percent have a telephone; 71 percent have a car; and 70 percent even have air-conditioning.”

If AI drives the cost of goods and services toward zero while dramatically increasing quality and quantity, the idea of sustainable abundance becomes economically feasible.

The transition will likely be tough, and the world may see some chaos, at least until UHI/UEI becomes a reality.

Source: Elon Musk on X

Question to Ponder

“Google’s Gemini 2.5 Flash Image is impressive, but will it ever become a full replacement for photo editors?”

It feels like we just witnessed the ChatGPT moment for image generation this week.

But will Gemini 2.5 Flash Image (or “Nano Banana”) replace photo editors entirely? I don't think so.

Here’s why: these models are fundamentally non-deterministic. Give one the same prompt and the same image twice, and you’ll get slightly different results each time. This isn't a bug; it's a natural consequence of how these models generate output, by sampling from a range of plausible results rather than computing a single fixed answer.

And honestly, this mirrors human creativity perfectly.

Give the same photo to two different editors, and they’ll produce completely different results. Their edits reflect their personal interpretation, creative vision, and artistic judgment. One might go bold and dramatic, another subtle and natural.
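To make the non-determinism point concrete, here is a toy Python sketch of the sampling idea. It is not how Gemini 2.5 Flash Image works internally: real image models sample over vastly richer outputs (pixels or image tokens), and the candidate edits, scores, and function names below are hypothetical. What it does show is that drawing from a probability distribution gives different results from run to run, that fixing the random seed makes a run repeatable, and that turning the “temperature” right down makes the top-scoring option win almost every time.

```python
import math
import random

def softmax(scores, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_edit(options, scores, temperature, rng):
    """Pick one option at random, weighted by its probability (one toy 'generation')."""
    probs = softmax(scores, temperature)
    return rng.choices(options, weights=probs, k=1)[0]

# Hypothetical candidate edits a model might score for one prompt + image.
OPTIONS = ["subtle warm grade", "bold high contrast", "muted film look", "bright pastel"]
SCORES = [2.0, 1.6, 1.2, 0.8]  # illustrative numbers, not taken from any real model

def run(seed, n=5, temperature=1.0):
    """Generate n toy edits using a random source seeded with `seed`."""
    rng = random.Random(seed)
    return [sample_edit(OPTIONS, SCORES, temperature, rng) for _ in range(n)]

if __name__ == "__main__":
    # Same "prompt", different random draws: the results usually differ.
    print(run(seed=1))
    print(run(seed=2))
    # Fixing the seed makes the randomness reproducible.
    print(run(seed=1) == run(seed=1))  # True
    # A very low temperature puts almost all probability on the top option.
    print(set(run(seed=3, temperature=0.01)))  # {'subtle warm grade'}
```

In this toy, the variety is the feature: a higher temperature spreads probability across more options, much like handing the same photo to several editors.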

This debate reminds me of the “AI will replace programmers” argument that we've been having for years. Sure, we might need fewer programmers, but replacement? Never.

What’s happening instead is the democratisation of skills. Just as coding tools have made programming more accessible, AI image tools are making photo editing available to everyone, without requiring an expensive software licence or specialist tool expertise. Anyone can use natural language to “remove the background”, “increase the saturation”, or “put this image of a sombrero on this other image of my dog”.

The key differentiators will always be critical thinking, independence of thought, and clarity of judgment. Clear thinking is what separates someone who can use a tool from someone who can create magic with it.

That means your output is enhanced and multiplied by these models, not replaced by them.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks

P.S. Unitree A2 is doing endurance tests carrying 250 kg.
