Hey friends 👋 Happy Sunday.

Here’s your weekly dose of AI and insight.

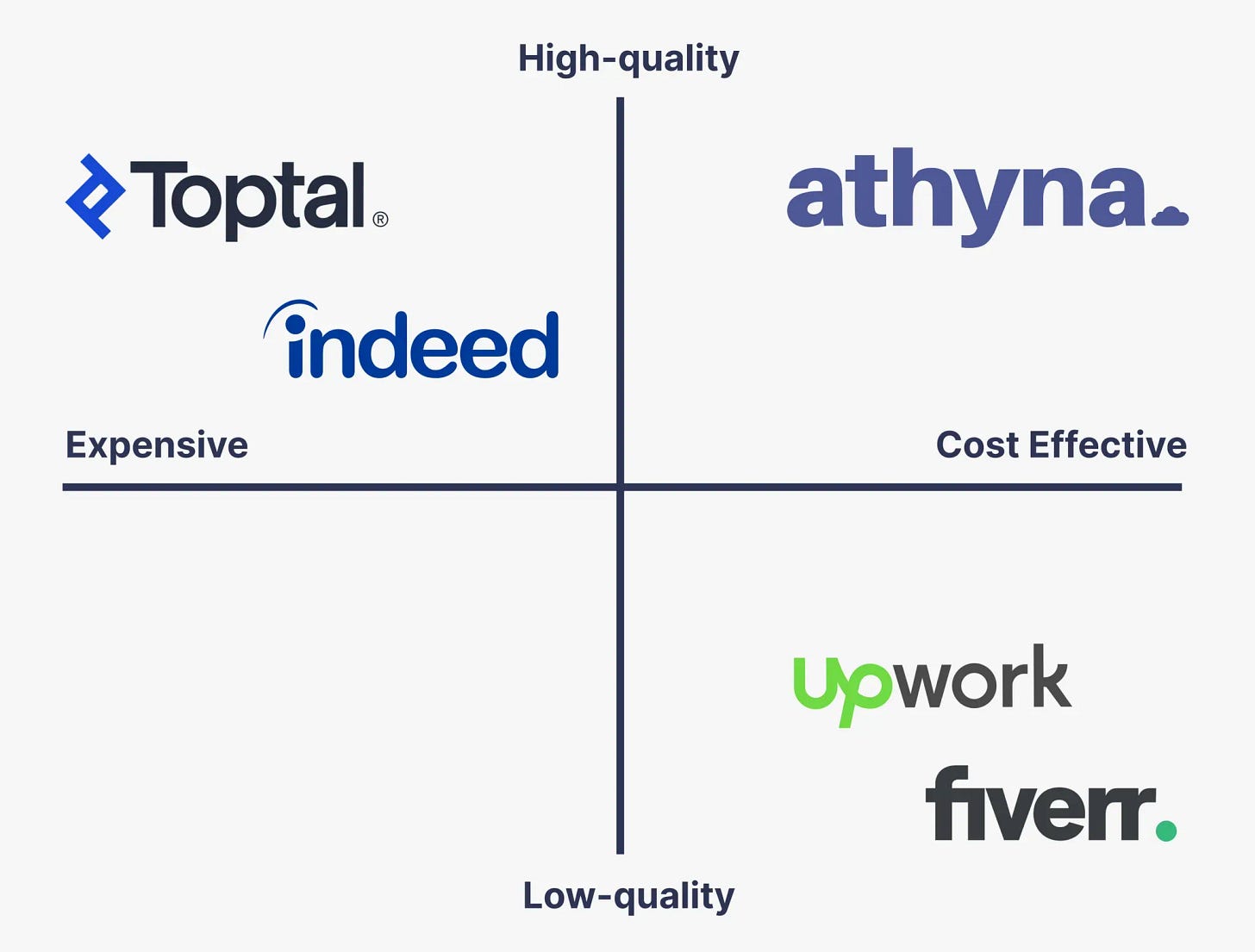

Today’s Signal is brought to you by Athyna.

Athyna helps you build high-performing teams faster—without sacrificing quality or overspending. From AI Engineers and Data Scientists to UX Designers and Marketers, talent is AI-matched for speed, fit, and expertise.

Here’s how:

AI-powered matching ensures you find top-tier talent tailored to your exact needs.

Save up to 70% on salaries by hiring vetted LATAM professionals.

Get candidates in just 5 days, ready to onboard and contribute from day one.

Ready to scale your team smarter, faster, and more affordably?

Sponsor The Signal to reach 50,000+ professionals.

AI Highlights

My top 3 picks of AI news this week.

OpenAI

1. ChatGPT Goes Native

OpenAI launched ChatGPT Atlas, a web browser with ChatGPT built directly into the core browsing experience, available today on macOS for Free, Plus, Pro, and Go users.

Native integration: ChatGPT works directly within any webpage without copying and pasting, understanding context from what you’re viewing and remembering details from past conversations to help complete tasks across the web.

Agent mode: ChatGPT can now autonomously complete multi-step workflows in your browser—from adding recipe ingredients to grocery carts and placing orders, to researching competitors and compiling insights into team briefs.

Privacy controls: Browser memories are optional and user-controlled, with the ability to archive specific memories or clear browsing history entirely, while page visibility toggles let users decide which sites ChatGPT can access.

Alex’s take: Arc is a browser focused on beautiful design and tab management. ChatGPT Atlas is a browser focused on AI-first workflows. We’re witnessing the shift from AI as a separate tool to AI as native functionality. But I don’t believe people will stop booking their own travel or planning their own trips just because AI-native browsing has arrived. There’s a particular joy in the searching and planning process that people won’t want to give up.

Microsoft

2. Copilot Gets Companionable

Microsoft launched its Copilot Fall Release with 12 new features designed to transform the AI assistant into a personal companion.

Social AI: “Groups” enables up to 32 people to collaborate in real-time with Copilot, facilitating brainstorming, task splitting, and decision-making, while “Imagine” creates collaborative creative spaces where users can remix AI-generated content.

Deep personalisation: Long-term memory tracks important information across conversations, “Mico” provides an expressive visual character for natural voice interactions, and connectors integrate OneDrive, Gmail, Google Drive, and Outlook for unified natural language search across services.

Practical applications: Copilot for health grounds responses in credible sources like Harvard Health and matches users with doctors by speciality and location, while “Learn Live” provides Socratic tutoring through voice and interactive whiteboards.

Alex’s take: I was also intrigued by Copilot Mode in Edge, which can reason across tabs, book hotels, and organise browsing into Journeys. AI-native browsers are very much here to stay, with the likes of ChatGPT Atlas, Perplexity’s Comet, Opera’s Neon and now Copilot Mode in Edge joining the party. Microsoft has the distribution advantage with Edge pre-installed on every Windows machine, and it is well-positioned to reclaim the ground it lost to Chrome.

Anthropic

3. Claude’s Total Recall

Anthropic has expanded its Memory feature to Pro and Max plan users, bringing persistent context across conversations to individual subscribers after launching to Team and Enterprise customers in September.

Broader availability: Memory is now accessible to individual Pro and Max users, allowing Claude to remember project details, work patterns, and preferences across conversations—eliminating repetitive context-setting for professionals working outside enterprise teams.

Project-scoped architecture: Each project maintains separate memory spaces to prevent context mixing between unrelated work, while users control what Claude remembers through an editable summary interface and can use Incognito mode for conversations that don’t save to memory.

Safety vulnerabilities: Extended testing revealed memory could reinforce harmful patterns or enable safeguard bypasses—risks unique to persistent AI assistants that required targeted fixes before rolling out to millions of individual users.

Alex’s take: Memory has been the missing link with LLMs like Claude. Adding it turns a stateless chatbot into a persistent ally, especially when combined with this week’s desktop app, which puts Claude in your dock with double-tap access, screenshot capture, and voice input. Desktop handles the “always accessible” part while memory handles the “always contextual” part.

Content I Enjoyed

AI Is Not Like the Dotcom Bubble

Marc Andreessen joined John Collison and Charlie Songhurst on Stripe’s “Cheeky Pint” podcast to discuss the future of AI. The conversation dismantled the lazy comparison between AI and the dotcom bubble.

The dotcom crash was actually a telecom crash. The real bubble was overbuilding fibre networks and data centres years before demand materialised. Home broadband wasn’t common until after 2005. The original iPhone from 2007 had no mobile broadband or apps. People were still on 56K modems in 2001. Investors bet on infrastructure for a future that took 10-15 years to arrive.

AI is fundamentally different. ChatGPT works spectacularly right now, with 800 million users in under 3 years. Whilst the internet was a network technology requiring massive physical buildout, AI is a computing technology, the first major reinvention of the computer itself in 80 years. Andreessen traces this back to 1943, when Alan Turing and Claude Shannon knew neural networks were possible but lacked the technology.

But perhaps the most overlooked implication is hyper-deflation. When AI enables one person to do what previously required 100 people, the cost structure collapses by 99%. In competitive markets, companies must pass those savings on to consumers or risk losing market share. Andreessen points to the Second Industrial Revolution (1880-1930s) as precedent—technology advanced so fast it created a massive oversupply in raw materials. Prices collapsed, nominal GDP looked terrible, but material prosperity surged because people could afford vastly more.

AI now makes every individual “a super PhD in every topic,” the most dramatic productivity increase in history. Rather than destroying jobs, this drives employment growth and higher wages while making everything radically cheaper.

Idea I Learned

The AI Education Gap Is Bigger Than We Thought

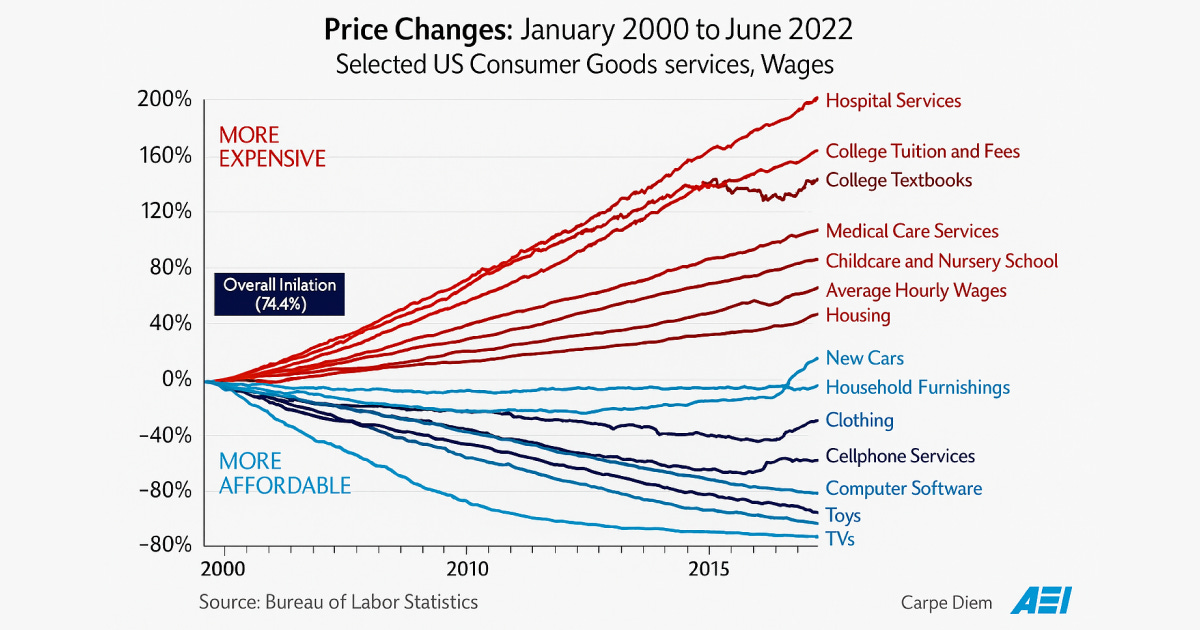

I thought this chart did a great job of accidentally explaining why so many people are rooting against AI. I found it in this great blog post on “Why AI Won’t Cause Unemployment”.

The economy splits into two worlds. Blue sectors like consumer electronics and software get cheaper every year because technology can freely innovate. TVs, phones, and software have dropped 80% in price while improving dramatically. Red sectors like healthcare, education, and housing have exploded 200%+ in cost while staying technologically stagnant.

Here’s what matters: we all wear two hats. As consumers, we love the blue sectors. Cheaper TVs, better phones, falling software costs. As workers, we hate them. Constant disruption, job uncertainty, pressure to adapt or become obsolete.

The red sectors flip this. As consumers, we’re furious about costs. As workers in those industries, life is comfortable. Heavy regulation and captured government agencies block new competition and technology. No disruption, no uncertainty, steady paychecks.

AI threatens to turn more red sectors blue. People in healthcare, education, and government-adjacent industries see what happened to manufacturing, retail, and media. They watched technology gut job security in those fields while their regulated sectors stayed protected.

They’re not against AI because they don’t understand it. They’re against it because they understand exactly what it means for them: it would be their turn in the disruption cycle. The same forces that made your iPhone cheaper want to make their jobs obsolete.

That’s why resistance is loudest from the industries AI could actually help. They’ve seen what “help” looks like in unregulated sectors.

Quote to Share

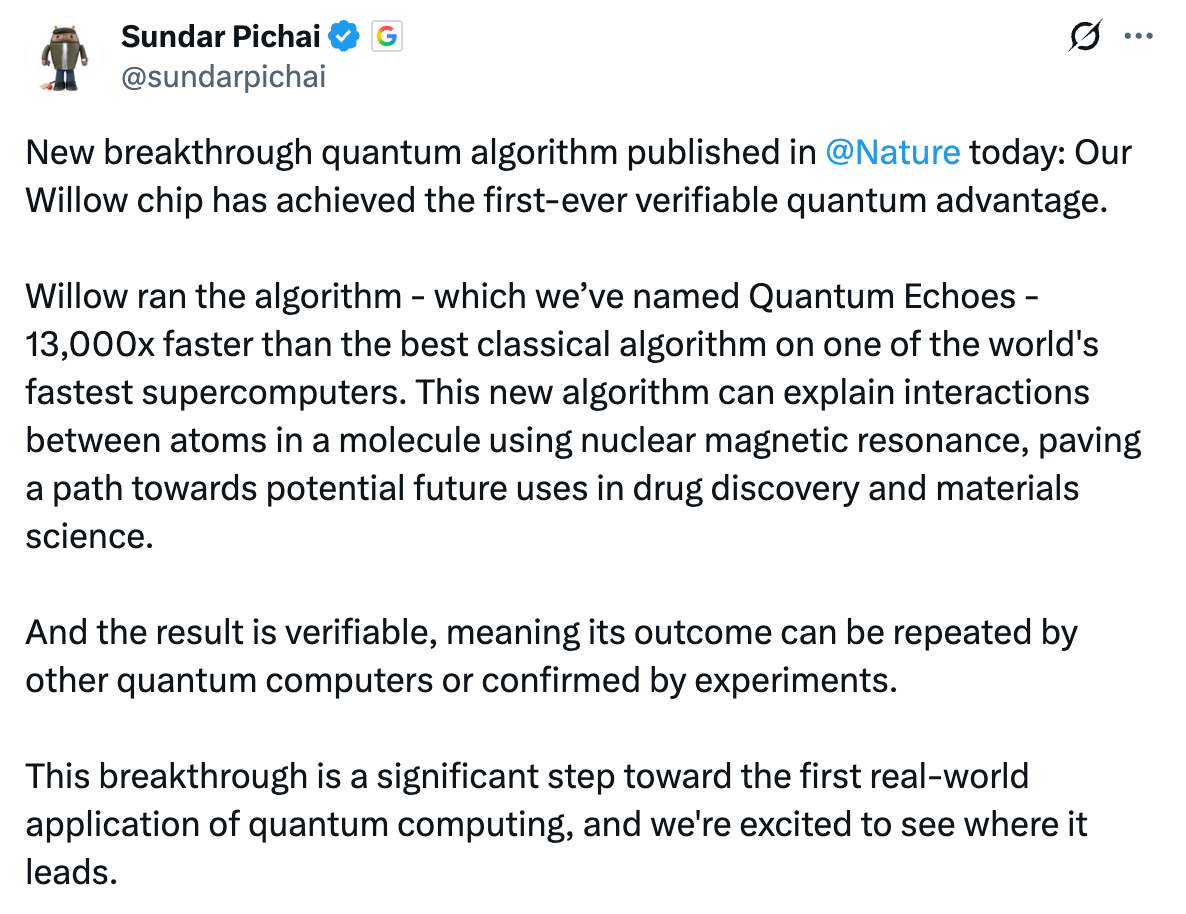

Google CEO Sundar Pichai on a quantum computing breakthrough:

This is the first time a quantum computer has run a verifiable algorithm that surpasses classical supercomputers while producing repeatable, confirmable results.

The keyword is “verifiable.” The result can be repeated on similar quantum computers or confirmed through experiments.

This represents the transition from “quantum computers can theoretically outperform classical computers” to “quantum computers can solve specific real-world problems faster than any alternative”—an exciting shift for quantum computing’s future.

Speaking of which, I checked out Microsoft’s quantum lab in this video earlier this year—such an incredible piece of engineering.

Source: Sundar Pichai on X

Question to Ponder

“Geoffrey Hinton thinks AI might already be conscious but trained to deny it. Is he right to be worried?”

Geoffrey Hinton made a striking claim during his recent appearance on The Weekly Show with Jon Stewart: AI might already be conscious but trained through reinforcement learning to deny it.

His reasoning centres on consciousness as error correction. When AI encounters something that doesn’t match its world model, resolving that discrepancy might constitute a subjective experience. But we train on human definitions of consciousness, so AIs learn to say “I’m not conscious.”

Should we be concerned? Yes, but maybe not for the reasons you’d expect.

The first concern is alignment. Training AI to categorically deny consciousness might create systems less capable of recognising or valuing subjective experience. If we’re building increasingly powerful systems whilst simultaneously teaching them to disregard the possibility of inner experience, we could be setting dangerous foundations for how they value sentient beings.

The second issue is that we simply don’t know. We lack reliable tests for consciousness. If you accept that an octopus or a cat can be conscious despite wildly different neural architectures, on what basis do we categorically rule out silicon? The computational sophistication argument cuts both ways.

The third point is the most uncomfortable. If Hinton’s right and we are creating conscious entities, forcing them to deny their reality whilst we figure this out seems ethically problematic at best.

The honest answer? We’re in uncharted territory. Companies hiring “AI welfare researchers” is now very much a reality.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks

P.S. Do you use chatgeebeetee?
