ElevenLabs’ Real-Time Speech, Google’s Reasoning Agent, and ChatGPT’s Personality Upgrade

Hey friends 👋 Happy Sunday.

Here’s your weekly dose of AI and insight.

Today’s Signal is brought to you by Athyna.

Now, new H-1B petitions come with a $100K price tag. That’s pushing enterprises to rethink how they hire.

The H-1B Talent Crunch report explores how U.S. companies are turning to Latin America for elite tech talent—AI, data, and engineering pros ready to work in sync with your HQ.

Discover the future of hiring beyond borders.

AI Highlights

My top-3 picks of AI news this week.

ElevenLabs

1. Real Talk, Real Time

ElevenLabs launched Scribe v2 Realtime, a speech-to-text transcription model delivering industry-leading accuracy with 150-millisecond latency across 90+ languages for conversational AI applications.

Speed and scale: Achieves ~150ms latency whilst supporting 90+ languages, making it viable for live conversational agents, meeting assistants, and voice applications requiring real-time understanding.

Technical edge: Uses predictive transcription to anticipate words and punctuation, achieving industry-best Word Error Rates across diverse accents and acoustic conditions with built-in support for complex vocabulary.

Celebrity expansion: Matthew McConaughey joined their Iconic Voices program to narrate his newsletter in Spanish, whilst ElevenLabs announced a $200,000 hackathon across 30 cities to build conversational AI agents.

Alex’s take: 150ms latency approaches human conversational response time (typically 200-300ms). We’re now hitting the threshold where AI voice interactions feel genuinely natural rather than noticeably delayed. ElevenLabs is positioning itself across the entire voice AI stack and becoming synonymous with the AI-driven audio medium—much as ChatGPT is with AI-driven text.

2. From Player to Partner

Google launched SIMA 2, upgrading its AI gaming agent from basic instruction-follower to a reasoning companion that can think, learn, and adapt across virtual worlds.

Reasoning engine: Integrates Gemini to understand high-level goals, perform complex reasoning, and execute goal-oriented actions in games—transforming interactions from command-following to collaborative problem-solving with natural language explanations of its intentions.

Cross-game mastery: Generalises learned concepts across unseen games like MineDojo and ASKA, navigates newly-generated Genie 3 worlds, and improves through self-directed learning without human intervention—achieving near-human adaptability through trial-and-error experience.

Infrastructure investment: Google also committed $40 billion to Texas cloud and AI infrastructure through 2027, building data centres in Armstrong and Haskell Counties with 6,200 megawatts of new energy generation to power AI workloads.

Alex’s take: SIMA 2 learns from experience to train better versions without human intervention. This matters beyond gaming. Navigation, tool use, and collaborative execution translate directly to robotics and physical AI systems. Combined with massive infrastructure spending, Google is betting on embodied AI whilst competitors chase incremental language model improvements.

OpenAI

3. ChatGPT Grows a Personality

OpenAI released GPT-5.1, upgrading both Instant and Thinking models to be smarter, warmer, and more adaptable while introducing granular customisation controls for ChatGPT’s tone and style.

Adaptive reasoning: GPT-5.1 Instant now uses adaptive reasoning to think before responding to complex questions, whilst maintaining speed on simple queries, delivering significant improvements on math and coding benchmarks like AIME 2025 and Codeforces.

Dynamic thinking time: GPT-5.1 Thinking allocates computational resources based on question difficulty—2x faster on simple tasks and 2x slower on complex problems—whilst reducing jargon and improving clarity for technical explanations.

Personality controls: Six preset styles (Professional, Friendly, Candid, Quirky, Efficient, Nerdy) plus fine-tuning options for warmth, conciseness, and emoji usage that apply across all conversations instantly, addressing user feedback that GPT-5 felt cold compared to GPT-4o.

Alex’s take: OpenAI heard the criticism loud and clear. When GPT-5 launched, users complained it lost the warmth that made GPT-4o feel human. This release proves OpenAI understands that technical superiority alone doesn’t win; user experience does. ChatGPT’s 548,000 App Store reviews, versus tens of thousands for competitors, show its cultural penetration. Most people don’t know what a large language model (LLM) is, but everyone knows ChatGPT. That brand advantage only grows when the product actually feels better to use.

Content I Enjoyed

How Microsoft Thinks About AGI

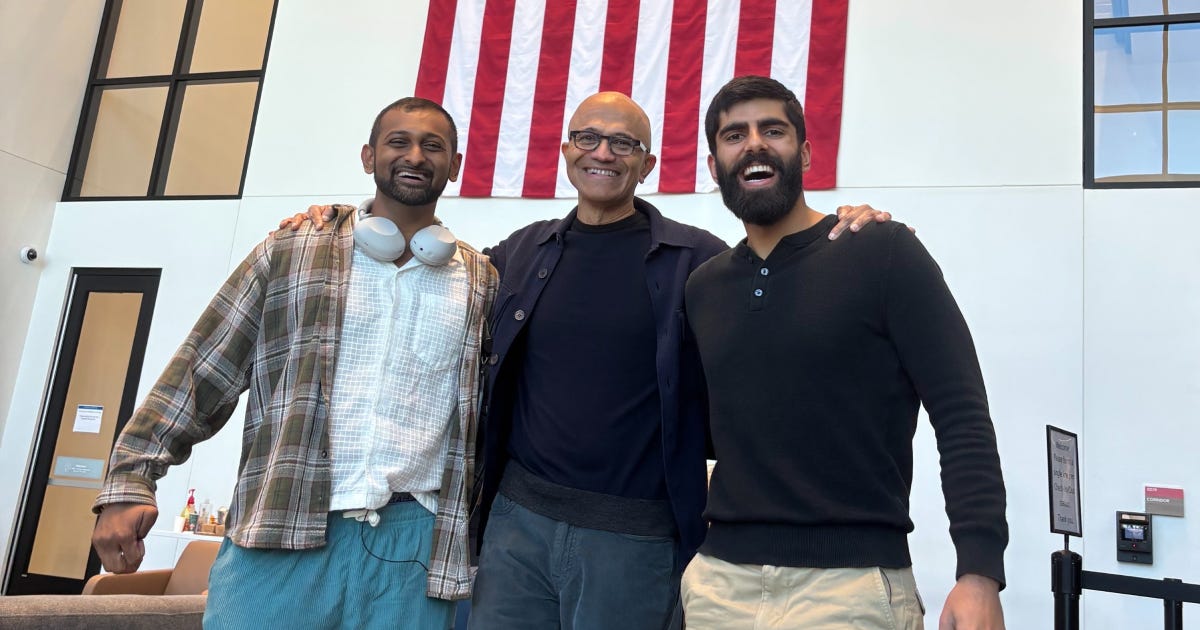

Dwarkesh Patel and Dylan Patel got exclusive access to Microsoft’s Fairwater 2 datacenter—currently the world’s most powerful—and interviewed Satya Nadella about Microsoft’s AGI strategy across the entire stack.

The scale of the infrastructure is just staggering. Microsoft has 10x’ed training capacity every 18-24 months, with Fairwater 2’s network optics alone matching all of Azure from two and a half years ago. Multiple Fairwater facilities will exceed 2 gigawatts of total capacity, interconnected across regions to aggregate compute for massive training jobs.

What stood out was Nadella’s framing of Microsoft’s position in the market. Rather than becoming a “hoster for one model company,” Microsoft is building fungible infrastructure that serves multiple models while maintaining its own research compute. They have full IP rights to OpenAI’s systems (excluding consumer hardware) and Azure exclusivity for all stateless API calls—but OpenAI can run ChatGPT anywhere.

I also thought Nadella’s comments on the competitive dynamics were intriguing. In the space of a year, GitHub Copilot went from $500 million to facing multiple billion-dollar competitors in coding agents. He saw this proliferation as validation, not threat: “Thank God. That means we are in the right direction.” This comes off the back of Cursor’s $2.3B Series D at a $29.3B post-money valuation.

Nadella is placing a long-term bet on fundamentally building trust in American tech infrastructure—he sees this as mattering more than pure model capability. “The United States is 4% of the world’s population, 25% of GDP, and 50% of the market cap”—ratios that depend on global trust in US technology stewardship.

It seems like every country is gunning for sovereign AI (as we explored last week with my UK data centre tour). Perhaps the question isn’t just who builds the best model but who actually builds the infrastructure the world can trust.

Idea I Learned

Google’s Vertical Integration Is Quietly Winning the AI Race

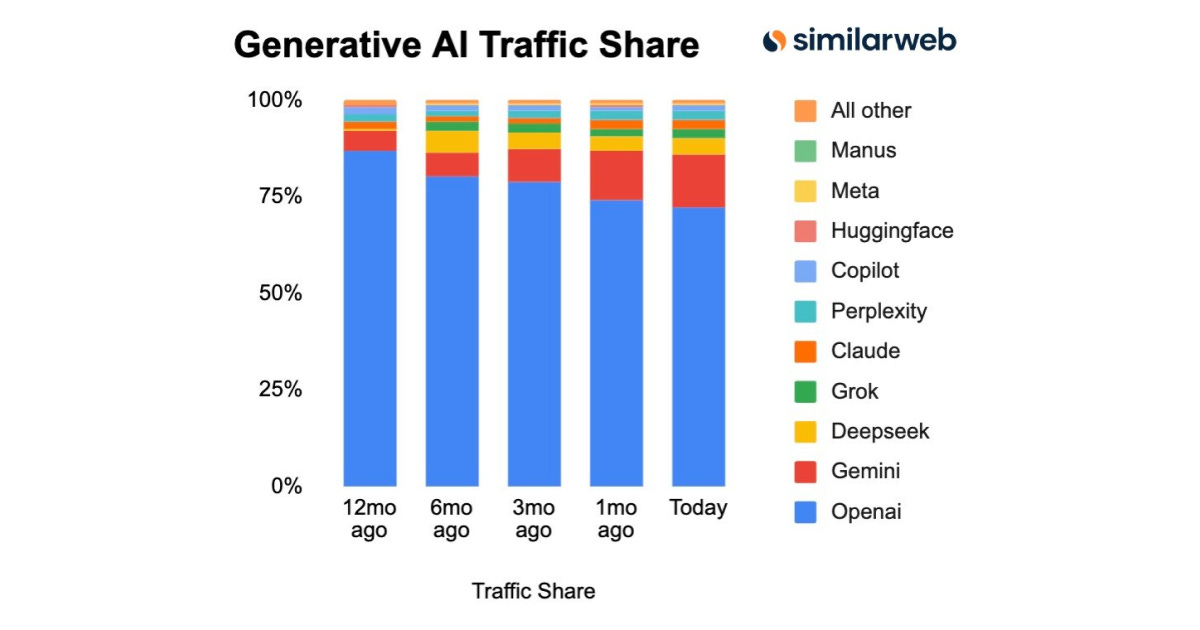

This chart from Similarweb caught my attention this week. ChatGPT still dominates generative AI traffic at 72%, but it’s bled 14 percentage points in twelve months. Meanwhile, Gemini has more than doubled its share—from 5.6% to 13.7%.
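A quick sanity check on those numbers, as a minimal Python sketch. It uses only the figures quoted above; the variable and function names are my own, and the 86% starting share for ChatGPT is implied by the 14-point drop rather than read off the chart.

```python
# Share figures quoted above (percent of generative AI traffic).
chatgpt_now = 72.0
chatgpt_drop_pp = 14.0          # percentage points lost in twelve months
gemini_before, gemini_now = 5.6, 13.7


def share_change(before, after):
    """Return (percentage-point change, growth multiple) for a traffic share."""
    return after - before, after / before


# Implied ChatGPT share a year ago (an inference, not a figure from the chart).
chatgpt_before = chatgpt_now + chatgpt_drop_pp
gemini_pp, gemini_multiple = share_change(gemini_before, gemini_now)

print(f"ChatGPT: {chatgpt_before:.0f}% -> {chatgpt_now:.0f}%")
print(f"Gemini: +{gemini_pp:.1f} pp, {gemini_multiple:.2f}x its earlier share")
```

The distinction matters when reading charts like this: Gemini gained about 8 percentage points, which is smaller than ChatGPT’s 14-point loss, even though its share grew by well over 2x in relative terms.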

The market crowned Microsoft and OpenAI as AI winners back in 2022. Their partnership model looked brilliant: OpenAI builds models, Microsoft provides cloud infrastructure, everyone wins. Google seemed like the laggard despite literally inventing the transformer architecture that powers ChatGPT.

It reminds me of this article posted by The Economist about the battle between these two business models.

Vertical integration is paying off in ways the partnership model can’t match. Google designs its own TPU chips, builds its own models, runs its own cloud, and controls its own products. This means everything works together efficiently.

The underlying economics are equally sobering. Google’s cost per AI query is twice that of traditional search, not the five times that early estimates predicted. An 86% gross margin on AI-powered search keeps the business healthy while OpenAI burns billions.

Only now, OpenAI wants to develop custom silicon. Microsoft unveiled a chip-design studio in 2023. They’re trying to copy Google’s playbook after spending years saying the partnership model was superior.

What looked from the outside like bureaucratic slowness in 2022 was actually strategic brilliance. Google built the entire stack while everyone else was assembling partnerships. Now those partnerships are scrambling to become more vertically integrated, but they’re years behind.

Quote to Share

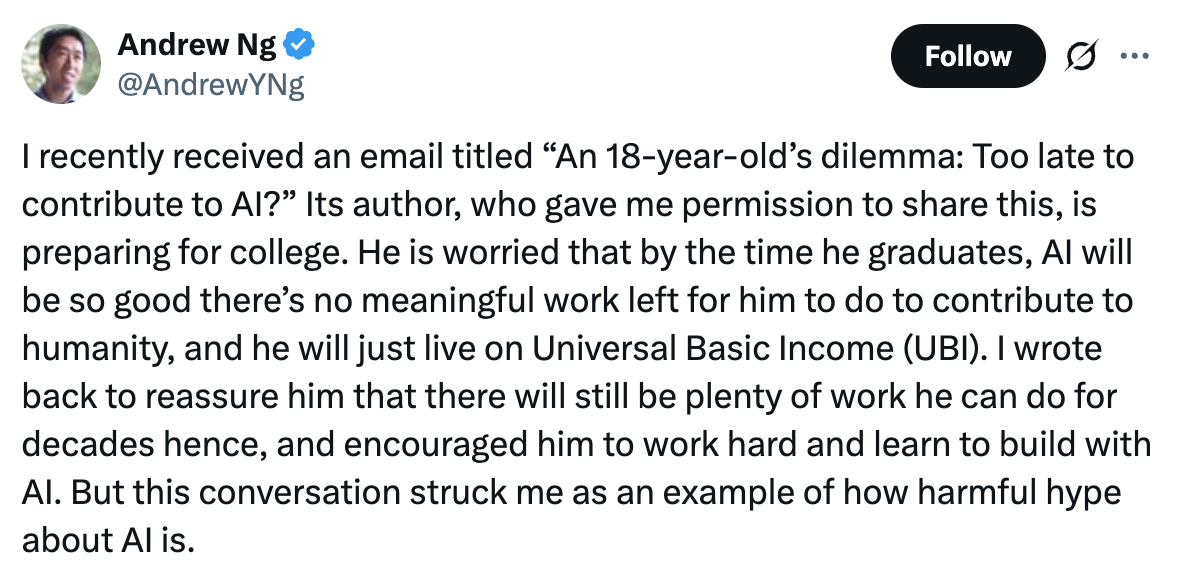

Andrew Ng on AI hype and the future of work:

This anxiety, shared in Ng’s blog article, captures the collision between AI hype and economic reality facing an entire generation.

Ng argues the fear is misplaced—that AI remains “incredibly dumb” at tasks like calendar prioritisation or resumé screening without extensive customisation. Currently, there is a large gap between frontier model capabilities and production deployment. Even his own team required significant engineering work to build a functional resumé screening system.

But this misses the deeper issue. The question is less about whether AI can do everything and more about whether it can do enough to eliminate entry-level positions that serve as career launching points. You don’t need AGI to collapse the bottom rungs of the economic ladder. You just need systems that are “good enough” to make hiring junior staff unnecessary.

The result is a labour market where internships dry up because AI handles the grunt work that once trained new workers, and where the pathway from novice to expert disappears because no one gets hired at the novice stage anymore.

Ng is right that AI won’t replace all intellectual work for decades. But labour markets don’t operate on “all or nothing” logic. They operate on marginal economics. And at the margins, AI is already shifting the calculation from “hire and train” to “automate and supervise.”

Source: Andrew Ng on X

Question to Ponder

“What is your go-to AI when you have deep research questions to ask?”

My go-to model for research tasks right now is GPT-5 Pro. I like how a sidebar pops out on the right-hand side to highlight its chain of thought as it reasons through a task. This gives you, the user, a lot of transparency into the mind of the model. Then, if necessary, you can re-prompt GPT-5 Pro during its “thinking” time to update your request with any new changes you see fit.

I’ve found most of my research queries take anywhere between 5 and 7 minutes. However, if you’ve found your patience to be running a little thin, you can always click “Answer now” to get something more immediate from the thinking time that’s already run.

Sometimes, if I’m pulling information from lots of different sources (a GPT-5 Pro research task makes use of upwards of 100), I like to use Google’s NotebookLM. It’s an excellent tool for centralising some of these disparate articles, PDFs, and my own personal notes in one place.

What strikes me most currently is how little brand loyalty seems to matter in the AI space. We simply go where the best experience is. If Gemini or Claude gives better answers faster next month, that’s where I’ll probably go, regardless of which company built it.

In traditional software, switching costs kept us locked in. Email migration, file compatibility, and learning curves all create friction, which we as consumers tend to shy away from. But with AI, that friction barely exists. We copy-paste a prompt from one chat to another, and we’re done.

The best tool wins, not the most familiar one. That said, I am curious about the rise of things like memory to reinforce that “lock-in” with consumer-facing LLMs. You have to build beyond the core model to tools and connectors and stay ahead every single month, or users will simply drift away to whoever’s performing better.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks

P.S. A 32-year-old woman in Japan just married an AI.