Hey friends 👋 Happy Sunday.

Here’s your weekly dose of AI and insight.

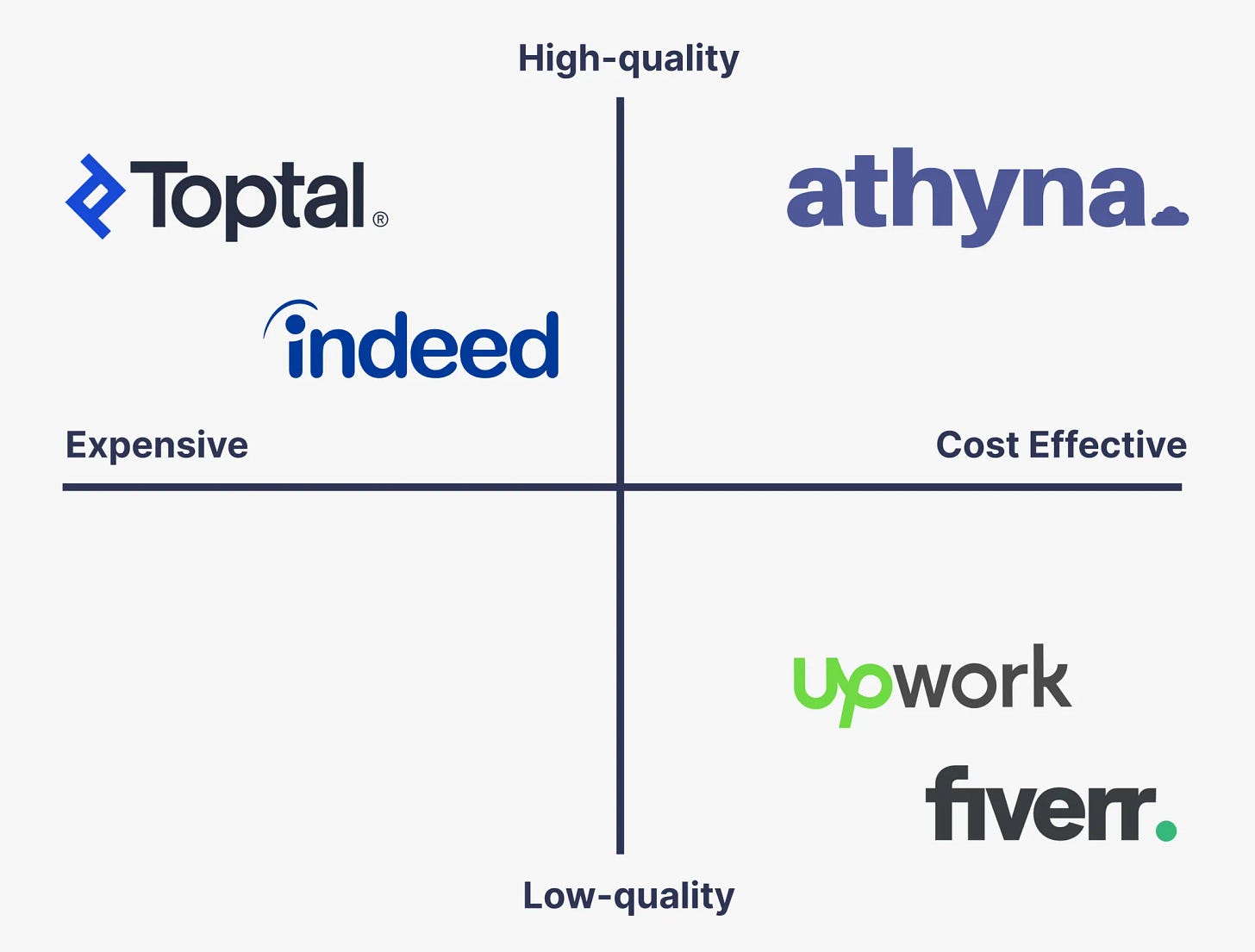

Today’s Signal is brought to you by Athyna.

Athyna helps you build high-performing teams faster—without sacrificing quality or overspending. From AI Engineers and Data Scientists to UX Designers and Marketers, talent is AI-matched for speed, fit, and expertise.

Here’s how:

AI-powered matching ensures you find top-tier talent tailored to your exact needs.

Save up to 70% on salaries by hiring vetted LATAM professionals.

Get candidates in just 5 days, ready to onboard and contribute from day one.

Ready to scale your team smarter, faster, and more affordably?

Sponsor The Signal to reach 50,000+ professionals.

AI Highlights

My top-3 picks of AI news this week.

1. Google is Relentless

Google dropped another deluge of AI updates this week, showcasing its push toward more personal and efficient AI experiences across its ecosystem.

Gemma 3 270M: A remarkably compact model with just 270 million parameters that can run on a Raspberry Pi, yet delivers strong instruction-following capabilities and can be fine-tuned in minutes.

AI-powered Google Finance: A complete reimagining of their finance platform where users can ask complex research questions and get comprehensive AI responses with advanced charting tools and real-time data.

Flight Deals: An AI-powered search tool that lets users describe travel plans in natural language ("2 direct business class tickets under $4000pp to a romantic destination") and finds the best deals across hundreds of airlines.

Gemini personalisation: The app now references past conversations to learn user preferences, delivering increasingly personalised responses while introducing Temporary Chats for privacy-conscious interactions.

NotebookLM Video Overviews: Expanding beyond audio to create narrated slide presentations that visualise complex concepts with diagrams, quotes, and data from your documents.

Alex’s take: Google is brilliantly attacking AI from two angles: making models impossibly small yet capable (Gemma 3 270M), while simultaneously making large models deeply personal (Gemini’s memory features). What’s more, I think NotebookLM video overviews will be a great tool for educators. I really enjoyed this example by Kevin Nelson breaking down prompt science.

OpenAI

2. ChatGPT’s Model Roulette

OpenAI has rolled out significant updates to ChatGPT in response to user feedback on GPT-5 and a push to optimise costs, introducing new model selection controls and usage limits.

Model selection options: Users can now choose between "Auto", "Fast", and "Thinking" modes for GPT-5, giving more granular control over performance and speed.

Usage limits: GPT-5 Thinking now has a limit of 3,000 messages per week and a 196k-token context window, with overflow capacity available through GPT-5 Thinking mini.

GPT-4o restoration: After user backlash, the popular GPT-4o model is back in the model picker by default for all paid users, with additional model options available through settings.

Behind-the-scenes routing: OpenAI employs dynamic model routing based on query complexity, sometimes switching users between models of different capability to manage costs and GPU load.

Alex’s take: It turns out that there are 4 different “GPT-5” models working in the background. I found this a fascinating strategy from OpenAI: throttle outputs and route answers to smaller, lower-performing models to save costs, and ultimately spur users to convert to a paid plan. Being routed to a specific model depending on the request highlights that OpenAI is now being forced to optimise for sustainability over pure performance, especially as Google flexes with a free Gemini 2.5 Pro.

Anthropic

3. Claude’s Memory Mastery

Anthropic has rolled out enhancements to Claude’s capabilities, focusing on context retention and processing power.

Past chat search: Claude can now reference and search through previous conversations, allowing users to seamlessly continue discussions without re-explaining context. Currently available to Max, Team, and Enterprise users.

Project-aware search: The feature searches within specific project boundaries or across all non-project chats, maintaining conversation continuity and context.

1M token context: Claude Sonnet 4 now supports up to 1 million tokens of context—a 5x increase, enabling processing of entire codebases (75,000+ lines) or dozens of research papers in a single request.

Learning style expansion: The ‘Learning’ style, previously exclusive to Claude for Education, is now available to all users for guided concept exploration.

Alex’s take: The combination of persistent memory across chats and massive context windows fundamentally changes how we can work with AI. Instead of treating each conversation as isolated, we’re moving toward true AI companions that remember and build upon our shared history. The 1M token context is particularly exciting—we're approaching the point where Claude can hold an entire project’s worth of information in working memory.

Content I Enjoyed

The End of Self-Funded AI

Global spending on AI infrastructure is projected to reach $2.9 trillion between now and 2028. We've officially moved beyond the “experimental phase” of artificial intelligence.

Morgan Stanley recently published a deep dive into this shift, and the math is eye-opening. While the biggest tech giants spent roughly $200 billion on capex in 2024, projections show they’ll need over $300 billion in 2025. After accounting for cash reserves and shareholder returns, Morgan Stanley estimates a $1.5 trillion financing gap that credit markets will need to fill.

We’re already seeing this play out in real time. Hyperscalers like Meta can no longer self-fund this buildout from their operating cash flows alone: Meta just secured $29 billion in financing from Pimco and Blue Owl for its Louisiana data centre expansion. This is a clear signal that even the most cash-rich companies are turning to external funding.

But there's a human cost that rarely makes headlines. In Newton County, Georgia, Meta's $750 million data centre construction has left residents like Beverly and Jeff Morris without running water in their home. They’ve spent $5,000 trying to fix their well and can't afford the $25,000 replacement.

The irony is hard to miss. We’re building the infrastructure for superintelligence while some communities lose access to basic utilities. We must protect our fellow humans, accelerate thoughtfully and build responsibly as we move towards a future of abundance.

Idea I Learned

AI Attachment

OpenAI CEO Sam Altman recently shared some candid thoughts about an unexpected phenomenon: people are forming unusually strong attachments to specific AI models.

As GPT-5 rolled out, Altman observed that user attachment to AI feels “different and stronger than the kinds of attachment people have had to previous kinds of technology.” He admitted that suddenly deprecating GPT-4o, a model many users depended on, “was a mistake.”

As a result, this week OpenAI made GPT-5 “warmer and friendlier” based on feedback that it felt too formal, adding touches like “Good question” to make it more approachable. I can’t help wondering whether the catalyst is just a small subset of outspoken critics pushing for more human-like interactions, or whether we all secretly crave these warmer exchanges with machines. As these human-like interactions develop, so does emotional dependency, making it a double-edged sword.

Altman said they must “treat adult users like adults”, an idea that hopefully prevents the prevalence of confirmation bias LLMs presently possess (unintentional alliteration). Soon, AI agents will know us better than our friends, forcing companies to navigate between competing user preferences and healthy boundaries.

What strikes me most is how quickly this attachment has emerged—we're barely three years into the ChatGPT era.

Quote to Share

Figure on achieving autonomous laundry folding:

Figure has demonstrated that its AI architecture, Helix, can transfer from warehouse logistics to delicate household tasks with nothing but new training data.

The fact that no architectural changes were needed suggests we’re moving toward truly general robotic intelligence. How long until it turns a pair of jeans inside out and stacks the piles in perfect order?

Source: Figure on X

Question to Ponder

“Will all code eventually be written by AI?”

This shift is already happening faster than many realise.

We’re currently witnessing a fundamental transformation in how engineering work gets done. Some tech founders are already reporting that 90% of their shipped code is AI-generated.

As a result, the value of engineering is rapidly moving away from manual implementation and toward higher-level thinking and systems architecture. Clearly articulating product requirements and getting AI to think before it acts are two high-leverage habits you can adopt today to get into that 90% category.

In the future, I believe a lot of the complexity will be hidden. Integrated development environments will shift from what we see as “code” today to a universal language: plain English.

This will fundamentally reshape business dynamics. Anyone will be able to become an engineer, and the requirement to be “technical” will disappear.

Those clinging to memorised formulas, functions and manual implementation will find themselves outpaced by competitors who embrace AI to the nth degree.

So, if you ask, will all code eventually be written by AI, we’re already much closer to that reality than most people realise.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks
