Google’s Gemini 3 Dominates, Microsoft Agents Take Office, and ElevenLabs’ Platform Play

Hey friends 👋 Happy Sunday.

Each week, I send The Signal to give you essential AI news, breakdowns, and insights—completely free. You can now join our community for in-depth AI workflows, insider announcements, and exclusive group chat access.

AI Highlights

My top-3 picks of AI news this week.

1. Gemini 3 Dominates

Google released a wave of major updates this week, shipping new models, creative tools, and development platforms across its entire product ecosystem.

Model dominance: Gemini 3 Pro tops LMArena at 1501 Elo and achieves breakthrough scores on PhD-level reasoning benchmarks (91.9% on GPQA Diamond, 37.5% on Humanity’s Last Exam). The model introduces “generative interfaces”: custom UIs, from visual layouts to interactive tools, generated on the fly from the user’s prompt.

Creative firepower: Nano Banana Pro (Gemini 3 Pro Image) delivers state-of-the-art image generation with unprecedented text rendering capabilities across multiple languages, studio-quality creative controls (lighting, camera angles, colour grading), and the ability to maintain consistency across up to 14 input images.

Developer transformation: Google Antigravity reimagines the IDE as an agent-first development platform where AI operates as an active partner rather than a tool. Powered by Gemini 3’s agentic coding capabilities (54.2% on Terminal-Bench 2.0, 76.2% on SWE-bench Verified), agents autonomously plan and execute complex end-to-end software tasks with direct access to the editor, terminal, and browser.

Alex’s take: The simultaneous launch of Gemini 3 across consumer, creative, and developer surfaces signals Google’s attempt to dominate the entire AI stack. What I love about tools like Nano Banana Pro is how immediately accessible they are and, as a result, how quickly people are finding practical applications. Turning 2D blueprints into 3D renders, creating landmark infographics, and visualising specific coordinates are amongst my favourites.


2. Microsoft Agents Take Office

Microsoft announced agent capabilities across its entire productivity suite at Ignite 2025, positioning itself as the infrastructure provider for enterprise AI.

Office document agents: Word, Excel, and PowerPoint agents use reasoning models to create documents, spreadsheets, and presentations directly in Copilot chat, then seamlessly transition to in-app editing with Agent Mode for deeper customisation and collaboration.

Enterprise AI browser: Edge for Business with Copilot Mode, which Microsoft bills as the world’s first secure enterprise AI browser, enables multi-step workflows across tabs with full enterprise security and compliance controls.

Copilot Notebooks: An AI-powered workspace combining notes, M365 files, and Copilot chats for grounded question-answering and insight generation. It is Microsoft’s answer to Google’s NotebookLM, natively integrated into the Microsoft 365 ecosystem.

Alex’s take: Work IQ (the intelligence layer that gives Copilot and agents context about your work) and Agent 365 (the control plane for managing agents across the organisation) form the infrastructure layer, whilst Office agents handle execution. This is the clearest articulation yet of how productivity software transforms from tool to teammate. The real unlock is enterprise grounding through Microsoft Graph: agents operate with full context of your organisation’s data, permissions, and workflows. Whilst Google has NotebookLM, Microsoft has enterprise distribution. For those interested, I did a video breakdown of the PowerPoint agent this week.


3. ElevenLabs’ Platform Play

ElevenLabs, known for AI voice generation, has launched Image & Video (Beta), integrating leading models like Veo, Sora, Kling, and others into a unified platform alongside their audio capabilities.

Model aggregation: The platform brings together top-tier image models (Nano Banana, Flux Kontext, GPT Image, Seedream) and video models (Veo, Sora, Kling, Wan, Seedance) with advanced features such as upscaling and lip-sync.

Complete workflow: Users can generate visuals, add voiceovers using ElevenLabs’ voice library, compose background music, layer sound effects, and export polished content, all within the unified timeline of ElevenLabs Studio.

Target positioning: Built specifically for creators, marketers, and content teams who need to move from concept to final export without switching between multiple tools or platforms.

Alex’s take: ElevenLabs is becoming a regular in the AI Highlights section of this newsletter. They’re betting that workflow integration matters more than pure model ownership. By aggregating the best models rather than just building their own, they’re positioning themselves as the control point for much of AI-generated content creation. I’m a big believer that as AI models commoditise, the real value shifts to whoever owns the creative workflow.

Content I Enjoyed

Rough Vibes at OpenAI

A leaked internal memo from OpenAI CEO Sam Altman has exposed cracks in what was once AI’s most dominant player. In the memo, obtained by The Information, Altman acknowledges that Google’s recent AI progress could “create some temporary economic headwinds” for OpenAI.

Google’s momentum in AI, particularly with their recent model releases of Gemini 3 and Nano Banana Pro, is forcing OpenAI to recalibrate expectations. OpenAI’s revenue growth could slow to single digits by 2026, down from triple-digit rates that drove revenue to $13 billion in 2025.

Against a projected $74 billion operating loss by 2028, those growth figures create a solvency crisis that no amount of optimism can paper over.

Altman has admitted that “Google has been doing excellent work recently in every aspect”. I feel this marks the beginning of a shift for OpenAI as the default dominant AI company: from three clean years of peacetime to what is now very much a wartime scenario.

From a benchmark lens, Gemini 3 Pro now leads GPT-5.1 on most reasoning and coding benchmarks. From a user lens, my new default model is Gemini 3 “Fast” mode for general Q&A tasks, and from a multimedia lens, Nano Banana Pro and Veo 3.1 are insanely impressive. This is beginning to neutralise OpenAI’s technical moat. We’re seeing this reflected in OpenAI’s recent roadmap of social features (the Sora 2 app and group chats in ChatGPT), which reads as a last-ditch effort to push top-line revenue and chase virality.

Overall, this is part of a wider market correction, revealing that frontier LLMs are rapidly commoditising. OpenAI pioneered the technology, but it can’t defend margins when competitors match its capability.

Google wasn’t the first search engine of the dot-com boom; many came and went before it. That’s why building a last-mover advantage matters more than anything in this hyper-competitive market, where owning the “stack” from chip to chatbot is the only truly enduring moat.

And that’s precisely what Google has been doing for the past decade, quietly building its own infrastructure to power these models, anchored by its trusty tensor processing unit (TPU).

Idea I Learned

You Can Literally Build Anything Now

Google removed the information barrier in 1998. Facebook removed the people access barrier in 2004. Agentic AI removed the building barrier in 2024.

Every tool you need to build something significant exists right now. The constraints that stopped previous generations are gone.

Want to start a company? Build a product? Launch a service?

The infrastructure is there. The knowledge is accessible. The tools are powerful beyond anything we’ve seen before. We’re now entering an era where the gap between idea and execution is shrinking to nearly zero. What used to take teams of engineers and months of work can now happen in days.

That’s why I believe the missing piece today isn’t the tools, but rather the education we expose ourselves to. Most people don’t know these capabilities exist or how to use them. We need to teach this in schools and workplaces—show people what’s actually possible.

The barriers are down. Now we need to teach everyone how to walk through. Only the fear of failing is holding you back.

Quote to Share

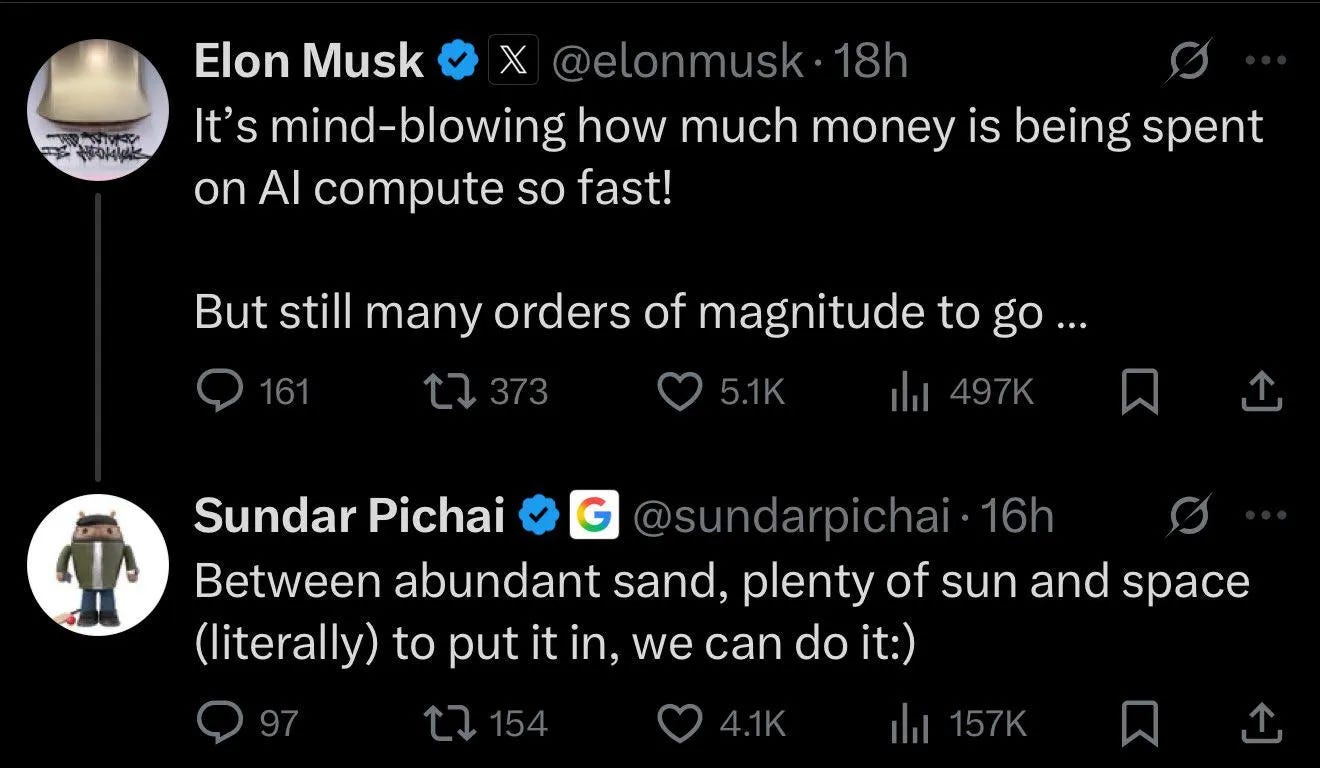

Elon Musk and Sundar Pichai on AI compute scaling:

Major tech companies like Amazon, Google, Meta, and Microsoft have already committed to investing over $300 billion in 2025 alone to build AI compute infrastructure.

What’s interesting to note is that AI compute demand is growing at over 2x the rate of Moore’s Law, which in turn is creating a massive shortage of chips. Just to meet current demand, $500 billion must be invested in data centres per year until 2030.
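A rough way to see what “over 2x the rate of Moore’s Law” implies, under two loud assumptions of my own: Moore’s Law is taken as a doubling every two years, and “twice the rate” is read as a doubling every year. Compounded over the five years from 2025 to 2030, that gives:

```latex
\begin{aligned}
\text{Moore's Law (doubling every 2 years):}\quad & 2^{5/2} \approx 5.7\times \\
\text{AI compute demand (doubling every year):}\quad & 2^{5} = 32\times \\
\text{Gap over the period:}\quad & \frac{2^{5}}{2^{5/2}} = 2^{5/2} \approx 5.7\times
\end{aligned}
```

On those assumptions, demand would outrun chip improvements by roughly 5.7x over the period, the kind of gap that shows up as a chip shortage.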

Data centres are now the new oil, and it seems as though setting up data centres in space (which Sundar appears to be referring to here) can only be achieved via SpaceX, which can launch them economically and at scale.

Source: Elon Musk and Sundar Pichai on X

Question to Ponder

“Google’s Nano Banana Pro is impressive, but will it ever become a full replacement for photo editors?”

It feels like we witnessed the ChatGPT moment for image generation this year. But will Gemini 3 Pro Image (or “Nano Banana Pro”) replace photo editors entirely? I don’t think so.

Here’s why: these models are fundamentally non-deterministic. Run the same prompt on the same image twice, and you’ll get slightly different results each time. This isn’t a bug, but a natural consequence of how these models operate: they sample each output from a probability distribution rather than following a fixed set of rules.

And honestly, this mirrors human creativity perfectly.

Give the same photo to two different editors, and they’ll produce completely different results. Their edits reflect their personal interpretation, creative vision, and artistic judgment. One might go bold and dramatic, another subtle and natural.
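To make the non-determinism point concrete, here’s a toy Python sketch. It’s an analogy, not how Nano Banana Pro works internally: it assumes a model that scores a hypothetical menu of possible edits (the `OPTIONS` and `LOGITS` values are made up) and then samples from those scores. Unseeded runs usually differ; fixing the seed makes the output repeat, similar in spirit to the seed setting some image tools expose.

```python
import math
import random

# Hypothetical edit choices for one image and prompt. Real image models
# sample far richer outputs than a short menu like this one.
OPTIONS = ["warmer tones", "cooler tones", "higher contrast", "softer light"]
# Hypothetical scores for each choice (higher means more likely).
LOGITS = [2.0, 1.5, 1.2, 0.8]


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(logits, options, steps, temperature=1.0, seed=None):
    """Sample `steps` edit choices; seed=None draws fresh randomness each call."""
    rng = random.Random(seed)
    probs = softmax(logits, temperature)
    return rng.choices(options, weights=probs, k=steps)


if __name__ == "__main__":
    # Same "prompt" twice with no seed: results usually differ between calls.
    print(generate(LOGITS, OPTIONS, steps=3))
    print(generate(LOGITS, OPTIONS, steps=3))
    # Fixing the seed pins the randomness, so these two lines match exactly.
    print(generate(LOGITS, OPTIONS, steps=3, seed=42))
    print(generate(LOGITS, OPTIONS, steps=3, seed=42))
```

The `temperature` parameter controls how adventurous the sampling is: near zero it almost always picks the top-scoring edit, while higher values spread the choices out, a bit like the bold versus subtle editors above.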

This debate reminds me of the “AI will replace programmers” argument that we’ve been having for years. Sure, we might need fewer programmers, but replacement? Never.

What’s happening instead is the democratisation of skills. Just as coding tools have made programming more accessible, AI image tools are making photo editing available to everyone, without requiring an expensive software licence or specialist knowledge of a particular tool. Anyone can use natural language to “remove the background”, “increase the saturation”, or “put this image of a sombrero on this other image of my dog”.

The key differentiators will always be critical thinking, independence of thought, and clarity of judgment. Clear thinking is what separates someone who can use a tool from someone who can create magic with it.

That means your output is enhanced and multiplied by these models, not replaced by them.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks
