GPT-5 Is Here, Google Takes Centre Stage, and ElevenLabs Strikes a Chord

Hey friends 👋 Happy Sunday.

Here’s your weekly dose of AI and insight.

Today’s Signal is brought to you by Pippit AI.

I just created a professional product video in 30 seconds. No camera, no models, no budget.

Meet Pippit AI. Upload any product image and watch their AI generate:

→ Professional product videos with virtual models

→ Clothing try-on content

→ Authentic-looking campaigns

Small businesses can now create content that rivals major brands — without the major brand budgets.

Sponsor The Signal to reach 50,000+ professionals.

AI Highlights

My top three AI news picks this week.

OpenAI

1. GPT-5 Is Here

OpenAI has unveiled GPT-5, their most advanced AI model yet, featuring a real-time router that automatically decides when to respond quickly and when to engage deeper reasoning for complex problems.

Unified intelligence: GPT-5 combines a fast, efficient model for most queries with a deeper reasoning system (GPT-5 Thinking) that activates automatically based on conversation complexity and user intent.

Dramatic improvements: The model shows significant advances in coding (creating responsive websites and games in one prompt), health conversations (67% score on HealthBench vs 32% for GPT-4o), and writing with enhanced literary depth and rhythm.

Reduced hallucinations: GPT-5 responses are 45% less likely to contain factual errors than GPT-4o, and with thinking enabled they are 80% less likely to contain a factual error than OpenAI o3, marking a significant leap in reliability.

Alex’s take: OpenAI has just gotten rid of five models overnight: GPT‑4o, o3, o4-mini, GPT‑4.1, and GPT‑4.5. I actually prefer this simplified structure, with just three models to rule them all: GPT-5, GPT-5 Thinking, and GPT-5 Pro. I also believe it’s a clear plan to shift free users onto a paid Plus or Pro plan. I’ve personally found “GPT-5 Thinking” (accessible to both Plus and Pro users) to be far superior in output quality across my internal tests. Moreover, “GPT-5 Pro” is the next leg up for research-grade intelligence, requiring a $200/month subscription. Intelligence really does command a premium. On top of the chart screwup during the launch, people were expecting GPT-5 to be AGI. Disappointment is ultimately what happens when reality doesn’t meet expectations, and this is why I believe there’s been a mixed reaction to the release so far.

2. Google Takes Centre Stage

Google has swooped in this week with a series of major announcements across its entire AI ecosystem.

Genie 3: A world model that generates interactive 720p environments in real-time at 24fps, maintaining consistency for several minutes and enabling users to navigate dynamic worlds from simple text prompts.

Enhanced Learning Suite: New Gemini features include Guided Learning for step-by-step problem solving, integrated visuals in explanations, and AI-powered study tools, plus a free one-year Pro plan for students globally.

AI Storybook Creation: Gemini now generates personalised 10-page illustrated stories with read-aloud narration in over 45 languages, allowing users to upload photos for custom artwork and choose from various art styles like claymation, anime, and comics.

Perch for Conservation: An updated bioacoustics AI model that helps scientists analyse wildlife audio data 50x faster, now trained on twice as much data covering mammals, amphibians, and underwater ecosystems to protect endangered species.

Alex’s take: Google is orchestrating a masterclass in AI strategy and shipping speed. Whilst competitors focus on optimising benchmarks, Google’s unbounded curiosity, evident in this week's deluge of announcements alone, marks it as a leading contender in the AI race. This coordinated ecosystem approach suggests Google sees what others miss: the winner won't be the company with the best chatbot, but the one that makes AI indispensable across every aspect of human life.

ElevenLabs

3. ElevenLabs Strikes a Chord

ElevenLabs has expanded beyond voice synthesis into AI music generation with the launch of Eleven Music, their new AI-powered music creation platform.

Studio-quality generation: Creates professional-grade tracks at 44.1kHz quality from simple text prompts, covering any genre or style with or without vocals in multiple languages.

Precision editing: Offers section-by-section generation and editing capabilities, allowing creators to craft seamless transitions, mood shifts, and detailed song structures with full control over duration, lyrics, and style.

Commercial-ready output: Produces tracks ready for immediate use across film, TV, podcasts, advertising, gaming, and social media, with an upcoming API for seamless integration into apps and workflows.

Alex’s take: ElevenLabs has built an empire on voice synthesis, and now they're applying that same audio expertise to music. What excites me most is their focus on commercial viability—too many AI music tools create impressive demos but fall short on licensing clarity. I think they're positioning to become the go-to solution for creators who need professional music without the traditional production overhead.

Content I Enjoyed

The Real in the Unreal

As the line between reality and illusion blurs before our eyes, the world we live in is rapidly becoming a virtual one. Google blew us all away this week with Genie 3, and I believe it deserves a deeper dive beyond our AI highlights section.

Consider what we're actually looking at here. Genie 3 can simulate vibrant natural ecosystems with complex animal behaviours, create fantastical animated scenarios, and let you explore historical settings across time and geography. Plug this into the VR headset they announced at Google I/O, and you've essentially built the metaverse: a persistent virtual world where users interact through avatars in immersive 3D environments.

While Genie 3 currently can't simulate real-world locations with perfect geographic accuracy, Google has something none of its competitors possess: comprehensive 3D mapping data of virtually every location on Earth. That advantage is invaluable right now. It must have been an interesting week at Meta HQ as Google edges one step closer to Mark’s metaverse.

Soon we'll have access to photorealistic environments indistinguishable from reality, where you can walk through ancient Rome, explore alien landscapes, or attend meetings in impossible architectural spaces.

After getting excited about all the possibilities this new technology could bring, I couldn't help but hear Robin Williams' quote from Good Will Hunting in the back of my mind: "If I asked you about art, you'd probably give me the skinny on every art book ever written... But I'll bet you can't tell me what it smells like in the Sistine Chapel."

That feeling of wind on your face, sun warming your skin, or the scent of rain-soaked earth—these can only be experienced in actual reality (for now). As we race toward perfect simulations, we risk forgetting that some of life's most profound moments happen when we step outside and engage with the beautifully imperfect real world. I think it comes back to the individual and what "living" means to you.

Idea I Learned

The Invisible Foundation

Revolutionary technologies emerge from foundations we never notice. Joey Politano’s latest graph perfectly captures this dynamic, showing US data centre construction spending hitting a record $40 billion annualised in June—the first time we've crossed this threshold, with a 28% increase from last year and a 190% leap since ChatGPT’s launch in November 2022 (nearly three years ago).
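For a rough sense of scale, here is a quick back-of-the-envelope sketch that backs out the earlier spending levels implied by those growth figures. It assumes both percentages are measured against the $40 billion annualised number quoted above; the function and variable names are purely illustrative.

```python
# Back out the earlier spending levels implied by the quoted growth rates.
# Assumption: both increases are relative to the $40bn annualised June figure.
current_bn = 40.0


def implied_baseline(current_value, pct_increase):
    """Return the value before a given percentage increase was applied."""
    return current_value / (1 + pct_increase / 100)


year_ago_bn = implied_baseline(current_bn, 28)     # 28% increase from last year
nov_2022_bn = implied_baseline(current_bn, 190)    # 190% leap since ChatGPT's launch

print(f"Implied level a year ago: ~${year_ago_bn:.1f}bn")
print(f"Implied level in November 2022: ~${nov_2022_bn:.1f}bn")
```

In other words, on these numbers spending was somewhere around $31 billion a year ago and under $14 billion when ChatGPT launched.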

The scale becomes even more striking when you consider how close we’re getting to spending more on data centres than office buildings. Just five years ago, office construction spending was 7x larger than data centre spending. Now, that gap has almost disappeared entirely.

It reminds me a lot of the original internet infrastructure buildout during the dot-com era. Companies laid over 80 million miles of fibre optic cables across the United States, enough to circle the Earth more than 3,000 times. The overcapacity was enormous: even four years after the bubble burst, 85% of those fibre lines remained dark and unused.

Yet that “wasteful” overbuilding enabled YouTube. When the platform launched in 2005, video streaming seemed financially impossible due to bandwidth costs. But thanks to the telecom industry’s overbuild on the supply side, bandwidth had become cheap enough to make free video hosting viable. Google acquired YouTube for $1.65 billion just a year later, and it now generates $31 billion annually.

Today’s AI infrastructure boom feels eerily similar, with Nvidia’s revenue rocketing to $130 billion as companies race to build tomorrow's digital backbone.

Quote to Share

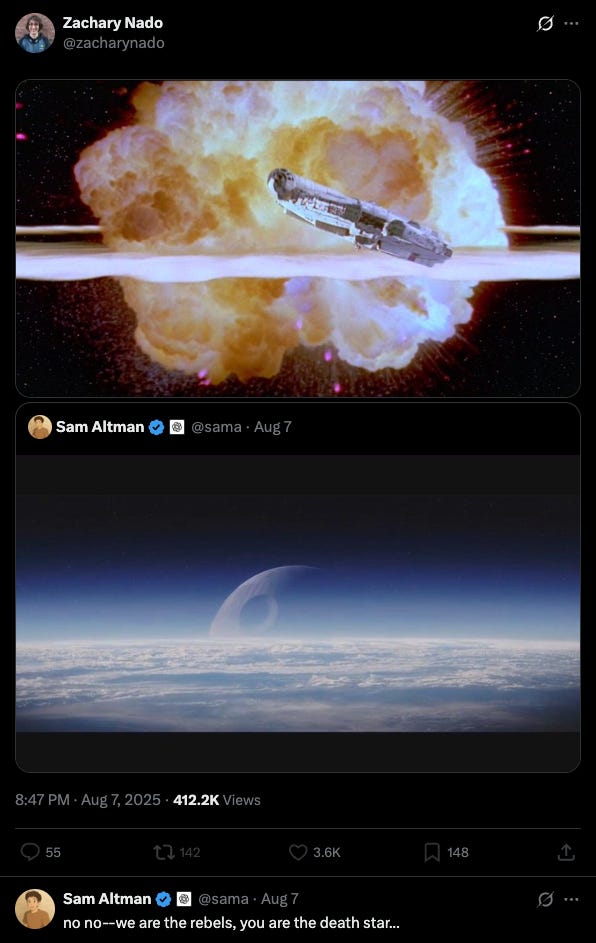

Sam Altman on the AI power dynamic:

This was Sam Altman's response to a Google employee’s Star Wars meme that positioned Google as the scrappy rebels fighting the OpenAI Death Star.

Altman's pushback reveals something fascinating about how these companies see themselves. OpenAI, despite being valued at $300 billion, still frames itself as the underdog taking on Big Tech’s empire.

But who’s really the rebel here? Google has the entire ecosystem—search, Android, YouTube, cloud infrastructure—and generates $371 billion in annual revenue. OpenAI has $13 billion in ARR but trades at a 23x revenue multiple compared to Google's 6x.
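To make the multiples concrete, here is a minimal sketch of the arithmetic using only the figures quoted above. Google's market value isn't stated in this issue, so the last line simply inverts the 6x multiple to show what valuation it would imply; treat that number as an illustration, not a reported figure.

```python
def revenue_multiple(valuation_bn, revenue_bn):
    """Valuation divided by annual revenue, both in $bn."""
    return valuation_bn / revenue_bn


# OpenAI: $300bn valuation on $13bn ARR (figures from the text above).
openai_multiple = revenue_multiple(300, 13)

# Google: the text gives the multiple (6x) and revenue ($371bn),
# so the implied valuation is just their product. This is an inference.
google_implied_value_bn = 6 * 371

print(f"OpenAI revenue multiple: ~{openai_multiple:.0f}x")
print(f"Google implied valuation at 6x: ~${google_implied_value_bn:,}bn")
```

Put differently, investors are paying nearly four times as much per dollar of OpenAI revenue as they are per dollar of Google revenue.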

The valuation gap tells the story: investors are betting on disruption over dominance. OpenAI represents the “what if” scenario where AI reshapes everything. Google represents the “what is” of entrenched tech power.

The “rebel” narrative gets complicated when you consider OpenAI's evolution from a nonprofit founded “to ensure artificial intelligence benefits all of humanity” to a for-profit entity facing criticism from Nobel laureates and safety advocates for abandoning its ethical mission. As Geoffrey Hinton warned: “Allowing it to tear all of that up when it becomes inconvenient sends a very bad message.”

Altman's rebel framing feels more urgent given GPT-5's mixed reception this week—bugs, transparency issues, and user complaints about quality. Meanwhile, Google stole the spotlight with Genie 3, a breakthrough world model that generates interactive 3D environments from text.

The irony is rich: the company that abandoned its charitable mission now claims rebel status against the empire that never pretended to be anything other than what it is.

Source: Sam Altman on X

Question to Ponder

“With OpenAI dominating headlines and Google pushing Gemini hard, which AI company do you think is being underestimated in the current LLM race?”

The true underdog for me currently is Anthropic.

This week, they released Claude Opus 4.1 with little fanfare, and their understated announcements convey a quiet confidence that I love. They released the Model Context Protocol (MCP) in November 2024, an open standard, open-source framework to standardise the way AI systems integrate with external tools.

Having built this infrastructure, they enabled MCP integration with Claude that lets you connect your emails, documents, and other data sources across your whole personal ecosystem. This is something you can't do with ChatGPT (yet).
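For the curious, here is a rough sketch of what "standardising" tool access looks like in practice. MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The tool name `search_documents` and its arguments are hypothetical, made up purely for illustration, and this only builds the message rather than talking to a real server.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP-style JSON-RPC 2.0 request asking a server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, not taken from any real MCP server.
request = build_tool_call(1, "search_documents", {"query": "Q3 budget"})
payload = json.dumps(request, indent=2)
print(payload)
```

The appeal is that any assistant that speaks this format can, in principle, use any tool that exposes it, without a bespoke integration for each pairing.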

For me, Claude gives the best output for writing and coding, and the whole energy of Claude feels like collaborating with a colleague you’d happily share a coffee with. I'm looking forward to when they enable persistent memory, as I think this will truly set them apart from other LLMs in the space.

But never say never—I'm sure by the end of the year we'll see many big announcements that might make me shift my current loyalty.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks
