NVIDIA Gets Physical, Gmail's Gemini Glow-Up, and ChatGPT Will See You Now

Hey friends 👋 Happy Sunday.

Here’s your weekly dose of AI and insight.

It turns out this week’s NotebookLM guide was a real hit with Pro members. So I’m keeping the momentum going. Next week, I’m dropping a complete breakdown of Claude’s Skills feature. Most people are using Claude like a chatbot. I’ll show you how to turn it into a custom AI workforce that actually knows your business. Upgrade today to get the full guide and every future issue.

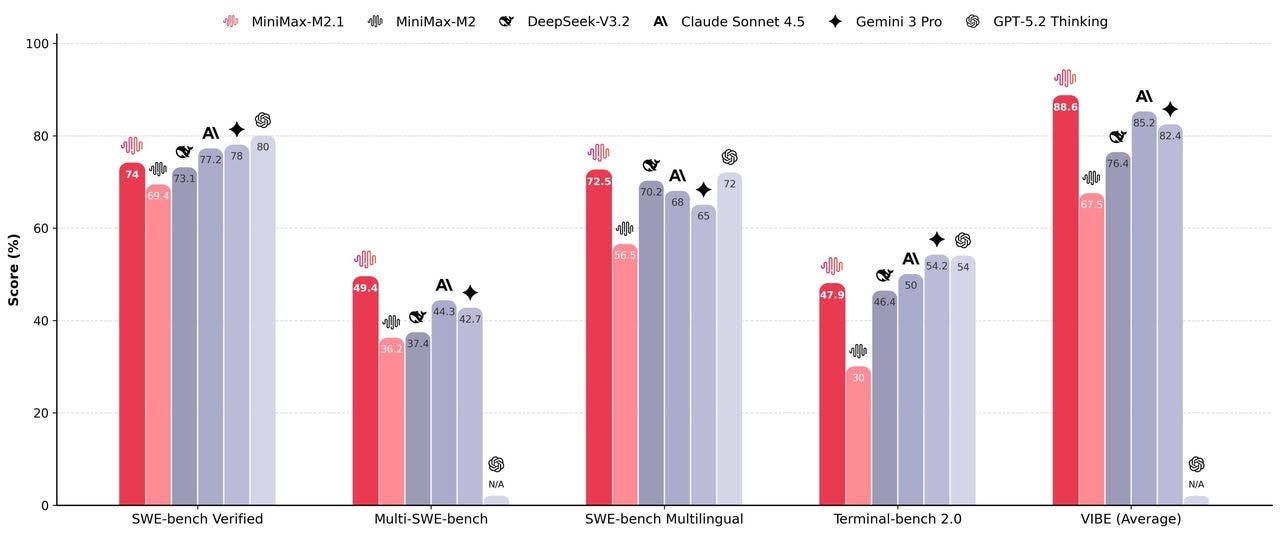

Today’s Signal is brought to you by MiniMax.

MiniMax M2.1 is OPEN SOURCE: SOTA for real-world dev & agents

It outperforms Claude Sonnet 4.5 and Gemini 3 Pro on every major coding benchmark: SWE, VIBE, Multi-SWE.

→ #1 open-source model on Code Arena

→ #1 on Hugging Face

→ Runs locally, built on a 230B-parameter MoE architecture

200k+ builders are already shipping with it.

AI Highlights

My top-3 picks of AI news this week.

NVIDIA

1. NVIDIA Gets Physical

NVIDIA dominated CES with a laser focus on “physical AI”: applying AI beyond text and images to robotics, autonomous vehicles, and real-world environments.

Alpamayo goes open: NVIDIA released the first open-source reasoning vision language action (VLA) model for autonomous driving. The 10-billion-parameter Alpamayo 1 reasons through decisions step-by-step, explaining why it’s making each move. Available now alongside 1,700+ hours of open driving data.

Cosmos for simulation: New open-source foundation models trained on massive video and robotics datasets can generate realistic driving scenarios, model edge cases, and run closed-loop simulations, enabling developers to train autonomous systems in virtual worlds before they touch pavement.

Rubin enters production: NVIDIA’s next-gen Rubin AI platform featuring six co-designed chips is now in full production, promising tokens at one-tenth the cost of Blackwell. Full commercial rollout expected H2 2026.

Alex’s take: I quite like Jensen’s framing: “The ChatGPT moment for physical AI is here.” NVIDIA is betting that the next AI gold rush is about machines that understand and navigate the physical world. By open-sourcing Alpamayo and Cosmos, they're trying to become the default infrastructure layer for robotics and autonomy, the same way they did for LLMs. It's a smart play: if every robotaxi and warehouse robot runs on NVIDIA's stack, the hardware moat gets even deeper.

2. Gmail’s Gemini Glow-Up

Google is embedding Gemini 3 directly into Gmail, transforming it from a communication tool into a proactive inbox assistant for its 3 billion users.

AI Overviews: Ask your inbox questions in natural language and get synthesised answers. Long email threads get automatic summaries. No more digging through a year of messages to find that plumber’s quote.

Writing assistance for everyone: Help Me Write, Suggested Replies, and Proofread are now available to all users. The AI learns your tone and style, offering one-click responses that sound like you actually wrote them.

AI Inbox (coming soon): A new view that filters out noise and surfaces what matters. It identifies your VIPs, highlights to-dos, and keeps you up to date on high-stakes items like bills due tomorrow.

Alex’s take: Google revolutionised email in 2004. They're doing it again 22 years later. Google’s distribution here is unmatched, especially with Gemini now embedded where 3 billion users already live. AI is very much becoming invisible infrastructure baked into tools we use daily, not a separate app we have to open. I'd love to see Google prioritise smarter phishing detection as part of this rollout. With AI reading your inbox anyway, catching dangerous emails and educating users on threats feels like a natural next step.

OpenAI

3. ChatGPT Will See You Now

OpenAI launched two healthcare products this week: ChatGPT Health for consumers and ChatGPT for Healthcare for enterprises, its biggest push into the medical sector yet.

Consumer play: ChatGPT Health lets users connect medical records, Apple Health, MyFitnessPal, and other wellness apps to get personalised health advice. OpenAI says 230 million people already ask health questions on ChatGPT weekly.

Enterprise expansion: ChatGPT for Healthcare gives hospitals a HIPAA-compliant workspace powered by GPT-5 models trained with physicians. Boston Children’s, Cedars-Sinai, Stanford Medicine, and HCA Healthcare are already rolling it out.

Bold claims: OpenAI says GPT-5.2 outperforms human baselines across every role measured in their GDPval benchmark, setting the stage for AI to handle increasingly complex clinical reasoning.

Alex’s take: This feels like classic Meta strategy. Watch startups validate a vertical, then absorb it. Meta copied Stories from Snapchat, Reels from TikTok, Threads from Twitter. OpenAI is running the same playbook here with healthcare AI startups like OpenEvidence proving the market, and now ChatGPT Health swooping in with 230 million weekly health queries already in the bag. Distribution wins. Also, this meme about using ChatGPT for health questions hit a little too close to home.

Content I Enjoyed

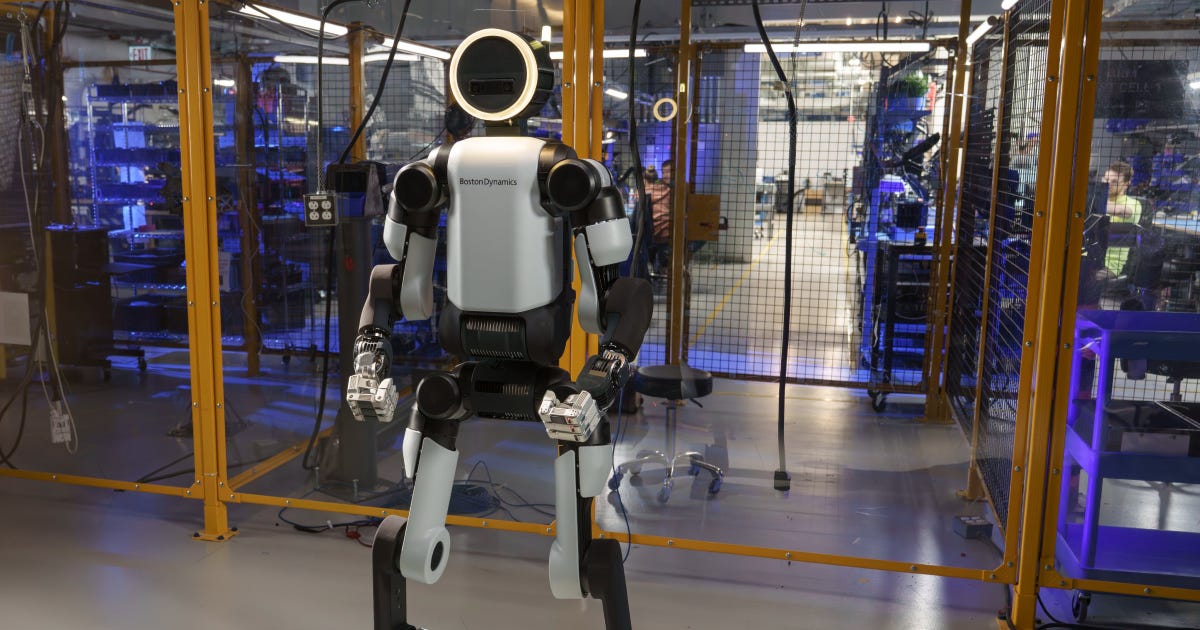

Boston Dynamics and DeepMind Join Forces.

Boston Dynamics just unveiled its upgraded electric Atlas humanoid at CES 2026, and the robotics world is paying attention. The demo video has racked up over 1.6 million views.

What caught my eye was the partnership announcement with Google DeepMind to integrate Gemini Robotics into Atlas. It addresses what has been the missing piece for years: hardware teams solved locomotion, but intelligence and local compute lagged behind.

The specs are impressive too: 56 degrees of freedom, a 4-hour battery life, self-swapping batteries for continuous operation, and a 1.9-metre frame designed specifically for factory work. Hyundai plans to deploy 30,000 units in its manufacturing facilities starting this year.

Some argue the humanoid form is suboptimal: why limit robots to human-like movements when their joints can rotate in ways ours can’t? But Atlas already exploits this advantage, with 360-degree torso rotations that are more energy-efficient than stepping. What’s more, the humanoid form lets it serve as a universal worker, much like a human.

Look to China and you can see the competition intensifying: Unitree already ships robots for $70k with similar battery-swap capabilities. We’re watching the early stages of an industry that could reshape entire sectors within the decade.

Idea I Learned

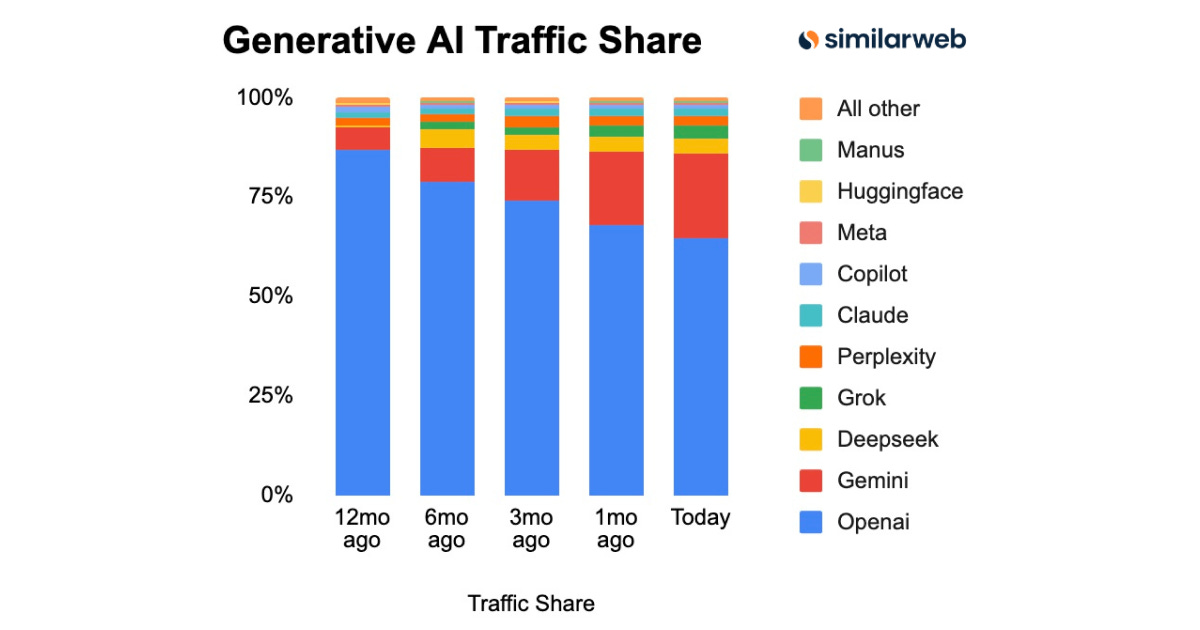

ChatGPT Lost 22 Points in 12 Months

The first Similarweb AI Tracker of 2026 was released this week, and the headline numbers speak for themselves. ChatGPT commanded 86.7% of generative AI traffic twelve months ago. Today it sits at 64.5%.

That’s a 22 percentage point decline in one year. Meanwhile, Gemini nearly quadrupled from 5.7% to 21.5%. Google’s patient infrastructure play is finally converting to consumer adoption.
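The headline figure is a percentage-point drop, which is not the same as a relative drop. A quick sketch using only the shares quoted above makes the difference concrete (the helper names are my own, for illustration):

```python
# Shares of generative AI web traffic (%) as quoted from the Similarweb AI Tracker.
chatgpt_then, chatgpt_now = 86.7, 64.5
gemini_then, gemini_now = 5.7, 21.5

def point_change(then: float, now: float) -> float:
    """Absolute change, in percentage points."""
    return round(now - then, 1)

def relative_change(then: float, now: float) -> float:
    """Change as a percentage of the starting share."""
    return round((now - then) / then * 100, 1)

def growth_multiple(then: float, now: float) -> float:
    """How many times larger the new share is than the old one."""
    return round(now / then, 2)

print(point_change(chatgpt_then, chatgpt_now))     # -22.2
print(relative_change(chatgpt_then, chatgpt_now))  # -25.6
print(growth_multiple(gemini_then, gemini_now))    # 3.77
```

So ChatGPT’s 22-point fall amounts to losing roughly a quarter of its starting share, while Gemini’s share grew about 3.8 times.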

But it’s worth flagging how incomplete this data is. It only captures website visits; API usage and integrations don’t show up at all.

This matters because the professional use case looks completely different from consumer traffic. Claude sits at 2% in these charts, but Anthropic’s models have become the default for developers and coding workflows. Every time someone uses Cursor, Windsurf, or an AI-powered IDE, that usage is invisible to web traffic metrics.

The same applies to enterprise deployments. Companies embedding AI into their products aren’t routing employees through chatgpt.com. They’re making API calls that never touch a browser.

So we’re looking at two parallel markets here. Consumer chatbots, where ChatGPT still leads but is bleeding market share monthly. And developer infrastructure, where the competitive picture looks entirely different.

OpenAI knows this. Their pivot toward agents, their enterprise push, their rumoured hardware plays: all of it signals a company trying to lock in users before the switching costs disappear entirely.

These web traffic numbers tell us something real about consumer mindshare, but the AI race won’t be won inside browser tabs alone. We have to look further, to the codebases and APIs these charts can’t see.

Quote to Share

Social Capital founder Chamath Palihapitiya on LLMs:

I thought this was a compelling analogy. Refrigeration was a transformative infrastructure. But the inventors didn’t capture most of the value. Instead, it was the companies that built on top of it who made the real money.

Palihapitiya has been making this point since early 2023, attributing the original insight to Warren Buffett. The argument is that OpenAI, Anthropic, and Google—these are the refrigerator makers. They’ll do fine. But the real fortunes will come from whoever figures out how to use these models to build something entirely new.

Three years later, we’re still waiting to see who that is. Microsoft has bolted AI onto Office. Google has added it to search. Salesforce to CRM. These are refrigerators in boardrooms, not empires being built.

The more interesting part of his thesis is that if you give the same inputs to different companies, they’ll get the same machine learning outputs. Differentiation only comes from unique data. The empire goes to whoever owns the data.

Source: The State of Investing with Chamath Palihapitiya | Global Alts 2023

Question to Ponder

With AI transcription tools becoming more accurate, how should content creators and businesses be thinking about speech-to-text in their workflows?

The transcription space just hit a new milestone.

ElevenLabs released Scribe v2 this week, which claims the lowest word error rate ever recorded on industry-standard benchmarks (across 90+ languages).
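Word error rate is the standard yardstick here: the number of word substitutions, deletions, and insertions needed to turn the transcript into the reference, divided by the number of reference words. A minimal sketch of that definition follows. It is not ElevenLabs’ evaluation code, and real benchmarks usually normalise case and punctuation before scoring, which this sketch skips.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count,
    computed as a word-level Levenshtein (edit) distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far
    # (one row back) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from transcript
                curr[j - 1] + 1,     # insertion: extra word in transcript
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)

# One substitution (Groq -> Grok) and one deletion (MMLU) over 5 reference words.
print(word_error_rate("Groq tops the MMLU leaderboard", "Grok tops the leaderboard"))  # 0.4
```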

A few features stand out as signs of where this technology is heading.

First up, keyterm prompting lets you feed the model up to 100 domain-specific terms—technical jargon, brand names, industry language—and it contextually decides when to use them. This solves a persistent problem for anyone transcribing specialised content. I’ve found this especially helpful in AI, where names such as “Groq” or benchmark names such as “MMLU” come into play.

It also has built-in entity detection that automatically identifies sensitive information across 56 categories, from PII to health data to payment details. This is especially helpful for compliance-heavy industries, as it removes a manual review step entirely.
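To show what entity detection produces downstream, here is a toy sketch that tags two categories in a transcript with hand-written regular expressions. This is a swapped-in technique, not how Scribe works: its detector is model-based and covers 56 categories, while the `PATTERNS` table and `[CATEGORY]` tag format below are hypothetical choices for illustration.

```python
import re

# Hypothetical hand-picked patterns for two categories only. A model-based
# detector uses context and covers far more categories than this.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    "CARD_NUMBER": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

def redact(transcript: str) -> tuple[str, list[tuple[str, str]]]:
    """Replace each match with a [CATEGORY] tag and return what was found."""
    found: list[tuple[str, str]] = []
    for label, pattern in PATTERNS.items():
        found.extend((label, m.group()) for m in pattern.finditer(transcript))
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript, found

text, found = redact("Email jane.doe@example.com, card 4111 1111 1111 1111.")
print(text)  # Email [EMAIL], card [CARD_NUMBER].
```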

Automatic multi-language transcription handles code-switching without manual segmentation. This means you can send audio with three languages in one file, and it just figures it out.

For content creators and businesses, I believe AI transcription has now passed the “good enough” threshold. The real question is which workflows you can now automate that were previously too time-intensive or error-prone to attempt.

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks

P.S. This is the most dexterous task I’ve seen a humanoid do so far.
