Hey friends 👋 Happy Sunday.

Each week, I send The Signal to give you essential AI news, breakdowns, and insights—completely free. Busy professionals can now join our community for in-depth AI workflows, insider announcements, and exclusive group chat access.

AI Highlights

My top-3 picks of AI news this week.

1. Oracle’s AI World Takeover

Oracle announced a comprehensive AI infrastructure and platform strategy at Oracle AI World, positioning itself as the only hyperscaler building across all three layers of the AI stack.

Infrastructure dominance: OCI Zettascale10 connects up to 800,000 NVIDIA GPUs across multi-gigawatt clusters—the largest AI supercomputer in the cloud, powering Project Stargate with OpenAI in Abilene, Texas.

Platform integration: AI Database 26ai architects AI directly into the database core with unified hybrid vector search and MCP server support, whilst AI Agent Studio supports major LLM providers with one-click deployment.

Application layer: Pre-built AI agents are embedded natively in Oracle Fusion Applications, where employees already work, so no app switching is required.

Alex’s take: Most cloud providers are competing for a single layer. Oracle is building vertically across infrastructure, platform, and applications. It was brilliant being there in person and watching Larry Ellison’s keynote, where he compared AI training to the scale of railroads and the industrial revolution. Oracle is building the rails, the trains, and the stations today that will power the frontier models of tomorrow.

2. Google’s Veo 3.1 Gets Cinematic

Google released Veo 3.1 and Veo 3.1 Fast in paid preview through Flow, its AI-powered video creation tool, and the Gemini API, with significant upgrades to video generation capabilities.

Enhanced quality: The model generates richer native audio with natural conversations and synchronised sound effects, plus improved understanding of cinematic styles and better prompt adherence.

Multi-modal control: Developers can now combine text prompts with up to 3 reference images and define both start and end frames, offering unprecedented creative direction over generated content.

Extended output: Scene extension allows videos to exceed one minute by generating clips that connect to previous footage, maintaining visual continuity across the entire sequence.

Alex’s take: Maintaining character consistency across shots has been the Achilles’ heel of AI video generation—solving this with reference images unlocks genuine narrative storytelling rather than just isolated clips. When you combine that with scene extension and frame-to-frame transitions, you’re looking at the early scaffolding for AI-native filmmaking.

3. Claude’s New Skills

Anthropic has launched Agent Skills, allowing Claude to access specialised instructions, scripts, and resources for specific tasks across all its products.

Just ask Claude: Creating custom skills requires no technical expertise—simply describe your workflow to Claude’s “skill-creator” skill, and it handles the folder structure, formatting, and resource bundling automatically.

Intelligent activation: Claude automatically scans and loads only relevant skills when needed, keeping performance fast whilst accessing specialised expertise for tasks like Excel manipulation or brand guideline adherence.

Universal compatibility: Skills work seamlessly across Claude apps (for Pro, Max, Team, and Enterprise users), the API via the new /v1/skills endpoint, and Claude Code through plugin installation.

Alex’s take: This is a significant shift in how we think about LLM capabilities. Rather than cramming everything into context windows or fine-tuning models, Skills let you modularise expertise and load it on demand. To get started, ask Claude to create a skill on a new topic or navigate to Settings → Capabilities → Skills and try some of the skills provided by Anthropic by toggling them on.

Content I Enjoyed

Why LLMs Don’t Learn Like Humans

Andrej Karpathy sat down with Dwarkesh Patel to discuss the fundamental limitations of how large language models learn, and his insights cut through the hype around current AI capabilities.

The core issue? Reinforcement learning, which Karpathy describes as “sucking supervision bits through a straw.” When an AI gets the right answer, it reinforces everything that led to that answer—even the wrong turns and irrelevant steps along the way. As he puts it, “Reinforcement learning is terrible. It just so happens that everything that we had before is much worse.”

What humans do differently is crucial. When we read, we’re prompted to generate our own understanding—manipulating information to gain knowledge. LLMs have no equivalent during pretraining. Karpathy envisions “some kind of stage where the model thinks through the material and tries to reconcile it with what it already knows.”

The roadblock? Model collapse. When you ask an LLM to reflect on a chapter 10 times, “you’ll notice that all of them are the same.” Unlike humans, LLMs lack the richness, diversity, and entropy in their synthetic reasoning. Maintaining that entropy during training remains an unsolved research problem.

His most provocative insight: “I’d love to have them have less memory so that they have to look things up and they only maintain the algorithms for thought.” Fallible human memory forces us to learn generalisable patterns rather than memorise specifics—a feature, not a bug, that current LLMs desperately need.

Idea I Learned

Western Executives Are Coming Back From China Terrified

Western executives visiting China are returning with a sobering message: we’re falling behind.

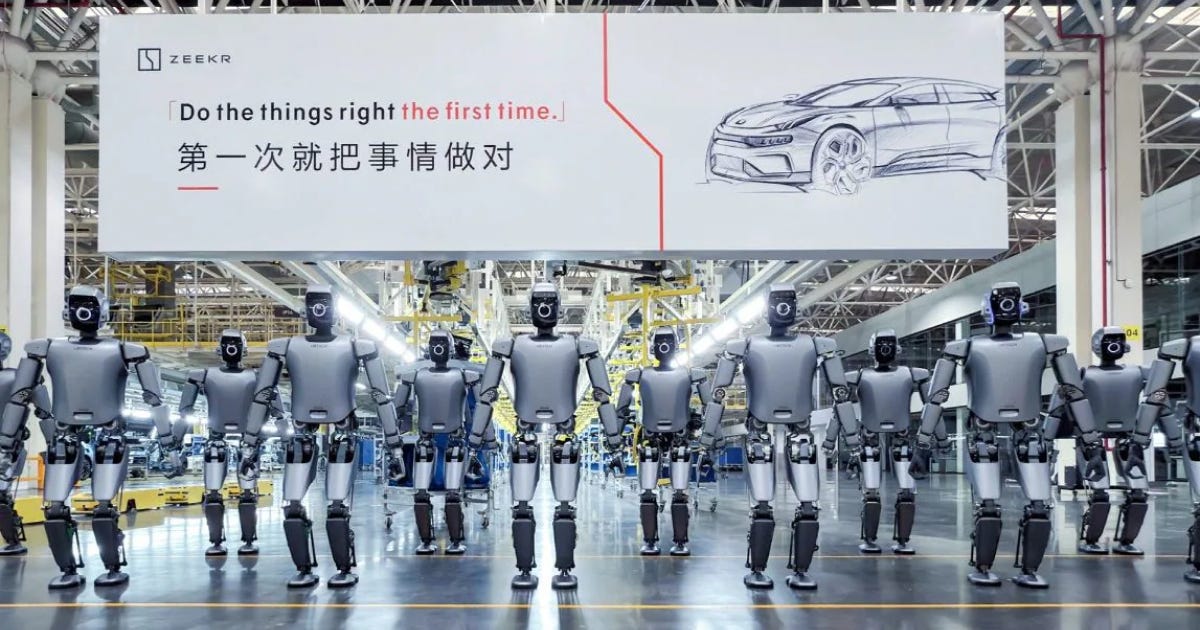

Ford’s CEO Jim Farley called his recent factory tour “the most humbling thing I’ve ever seen.” He witnessed Chinese manufacturers producing vehicles with superior quality at lower costs than anything in the West.

Why? As Palmer Luckey recently noted on the Joe Rogan podcast: “Because the cost of resource extraction is lower. The cost of making steel and aluminium is lower. The cost of building a factory is lower. And that’s why you’re able to buy an awesome car for $10,000 in China.”

China added 295,000 industrial robots last year. The US added 34,000. The UK? Just 2,500.

Australian billionaire Andrew Forrest describes walking 900 metres alongside a conveyor belt where machines emerge from the floor, assemble parts, and produce a complete truck—without a single human worker.

These “dark factories” operate with minimal lighting because robots don’t need to see. China now has 567 robots per 10,000 manufacturing workers, compared to 307 in the US and 104 in the UK.
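
To put those robot numbers side by side, here is a minimal Python sketch that simply divides China's figures by the US and UK figures quoted above. The variable and function names are my own illustrative choices, and it assumes the figures are directly comparable (same year, same definitions), which the source does not confirm.

```python
# Figures quoted in this section; comparability across countries is assumed.
robots_added = {"China": 295_000, "US": 34_000, "UK": 2_500}
# Robots per 10,000 manufacturing workers.
robot_density = {"China": 567, "US": 307, "UK": 104}


def ratio_to_china(figures: dict[str, int]) -> dict[str, float]:
    """Return China's figure divided by each other country's figure."""
    china = figures["China"]
    return {
        country: round(china / value, 1)
        for country, value in figures.items()
        if country != "China"
    }


installs = ratio_to_china(robots_added)
density = ratio_to_china(robot_density)
print("New installs, China vs:", installs)
print("Robot density, China vs:", density)
```

On these figures, China installed roughly 8.7 times as many robots as the US and 118 times as many as the UK, while its lead in robot density is narrower, at about 1.8 times and 5.5 times respectively.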

This automation surge is already reshaping industries. Chinese EV maker BYD multiplied its UK sales tenfold in September, overtaking established brands like Mini and Land Rover.

Reading this article reminded me of Brett Adcock’s argument this week at Dreamforce 2025 that the company that solves general-purpose humanoid robots will be worth 200 times Apple’s valuation. Chinese firms like Unitree are advancing rapidly in this space.

Meanwhile, Jensen Huang noted that NVIDIA went from 95% market share in China to zero due to chip bans. This forces Chinese AI labs like DeepSeek to develop domestic alternatives, which will inevitably accelerate China’s semiconductor independence.

The West is not only losing a manufacturing race; it is also watching China build the infrastructure for the next industrial era while adding robots at a fraction of China’s pace.

Quote to Share

Bloomberg on Walmart’s partnership with OpenAI:

“Walmart Inc. is teaming up with OpenAI to enable shoppers to browse and purchase its products on ChatGPT, the retailer’s latest push to incorporate artificial intelligence.”

It seems like this might be the next big evolution in shopping. We’ve gone from in-store purchases to online retailers, to the bundling of commerce on Amazon, and now to AI-driven purchases.

Users can browse and purchase products from Walmart and Sam’s Club with a simple “buy” button, with their accounts automatically linking to ChatGPT. What’s more, OpenAI has similar deals with Etsy and Shopify, signalling a broader trend toward conversational commerce.

I think the timing is notable too—just days after Sam Altman announced ChatGPT would relax usage restrictions and introduce age-gating for adult content. It seems ChatGPT has evolved a lot recently, beyond the pure chat engine released in 2022. We’re now approaching a platform where you can shop, get unfiltered responses, and soon handle more complex tasks.

Source: Bloomberg

Question to Ponder

“This graph shows ChatGPT reached 800 million users in just 3 years, while the internet took 13 years to reach the same milestone. Does this mean AI is genuinely progressing at an unprecedented rate?”

The comparison is misleading.

ChatGPT rode the internet’s existing infrastructure. The internet had to build cables, install modems, and convince people to buy computers. ChatGPT just needed a web browser.

So the story is more about compounding infrastructure than an apples-to-apples comparison of adoption speed.

The internet took 13 years to build the foundation. Everything that follows leverages that foundation and naturally spreads faster. Social media platforms like Instagram and TikTok hit similar adoption curves because the groundwork was already laid.

However, dismissing the comparison entirely misses the point.

AI’s rapid proliferation means its impact will be felt immediately and simultaneously across society. The internet gradually transformed different sectors over decades. AI is hitting everything at once.

The accessibility is unprecedented. There are no downloads, no hardware to purchase, no learning curves to climb. Just type and receive in a web browser. This democratisation means AI’s effects, good and bad, will compound faster than those of any previous technology.

So yes, the graph is a flawed comparison. But the underlying truth holds: because AI builds on existing infrastructure, its societal impact will accelerate beyond what we experienced with the internet itself.

Got a question about AI?

Reply to this email and I’ll pick one to answer next week 👍

💡 If you enjoyed this issue, share it with a friend.

See you next week,

Alex Banks

P.S. Anduril Unveils EagleEye AI Helmet for US Army.

Fascinating. Thanks for clearly explaining Oracle's vertical strategy; it's quite prescient.